Intelligent GIF Search: Finding the right GIF to express your emotions

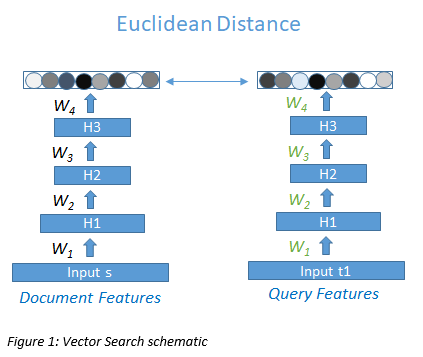

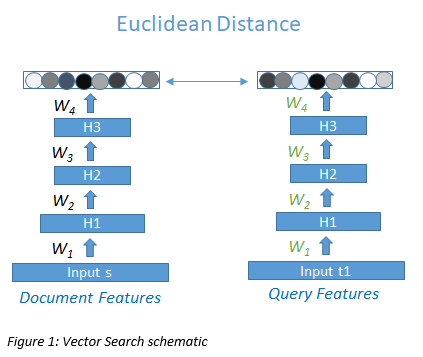

Vector Search and Word Embeddings for Images to Improve Recall

GIF Summarization and OCR algorithms for improving precision

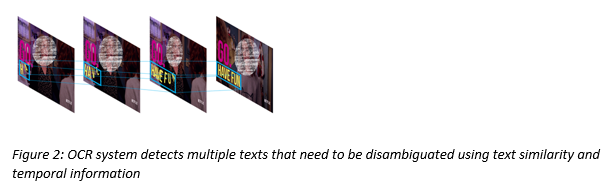

The multi-frame nature of a GIF introduces additional complexities for OCR as well. For example, an OCR system would look at the images below and detect four different pieces of text: “HA”, “HAVE”, “HAVE FU” and “HAVE FUN”. In fact, there is just one piece of text, “HAVE FUN”, revealed progressively across frames. We use text similarity combined with spatial and temporal information to disambiguate such cases.
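To make the idea concrete, here is a minimal sketch of how per-frame OCR detections could be merged into a single piece of text. It is not our production implementation: the `Detection` type, the thresholds, and the "prefix or similar string" rule are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass
class Detection:
    frame: int   # index of the frame where the text was detected
    box: tuple   # (x0, y0, x1, y1) bounding box in pixels
    text: str    # raw OCR output for that region


def iou(a, b):
    """Intersection-over-union of two (x0, y0, x1, y1) boxes."""
    ix = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union else 0.0


def same_text(prev, cur, max_gap=2, min_iou=0.2, min_sim=0.6):
    """Decide whether `cur` is a later view of the text seen in `prev`."""
    close_in_time = 0 < cur.frame - prev.frame <= max_gap   # temporal cue
    overlapping = iou(prev.box, cur.box) >= min_iou         # spatial cue
    similar = (cur.text.startswith(prev.text)               # text cue
               or SequenceMatcher(None, prev.text, cur.text).ratio() >= min_sim)
    return close_in_time and overlapping and similar


def merge_detections(detections):
    """Group detections into tracks and keep the longest string of each track."""
    tracks = []
    for det in sorted(detections, key=lambda d: d.frame):
        for track in tracks:
            if same_text(track[-1], det):
                track.append(det)
                break
        else:
            tracks.append([det])
    return [max(track, key=lambda d: len(d.text)).text for track in tracks]
```

Given the four detections from the example, each in roughly the same place on consecutive frames, `merge_detections` returns a single string, “HAVE FUN”, while text that appears elsewhere in the frame stays separate.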

Sentiment Analysis using text – to improve results quality

To understand the sentiment of GIF documents, we analyze the text that surrounds them on web pages. With sentiment available for both the query and the documents, we can match the sentiment of the user's query to that of the results they see. For instance, if a user issues the query “good job”, and we’ve already detected text like “Good job 😊 😊 ” on chat sites, we infer that “Good job” is a query with positive sentiment and choose GIF documents with positive sentiment.
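As a rough illustration, the matching step could look like the sketch below. The tiny word lists stand in for a real sentiment model, and the function names are hypothetical; only the overall flow (score the surrounding text, then match query and document sentiment) comes from the description above.

```python
# Toy lexicons: assumptions for illustration only, not a real sentiment model.
POSITIVE = {"good", "great", "awesome", "congrats", "love", "😊"}
NEGATIVE = {"bad", "sad", "terrible", "angry", "hate", "😢"}


def sentiment(text):
    """Return +1, 0 or -1 for a single piece of text."""
    tokens = [t.strip(".,!?") for t in text.lower().split()]
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    return (score > 0) - (score < 0)


def aggregate_sentiment(texts):
    """Combine the sentiment of several texts (e.g. all text around one GIF)."""
    total = sum(sentiment(t) for t in texts)
    return (total > 0) - (total < 0)


def match_by_sentiment(query_contexts, gifs):
    """Keep GIFs whose surrounding-text sentiment matches the query's.

    query_contexts: texts from the web in which the query was observed
    gifs: dict mapping a GIF id to the list of texts surrounding that GIF
    """
    target = aggregate_sentiment(query_contexts)
    return [gif_id for gif_id, texts in gifs.items()
            if aggregate_sentiment(texts) == target]
```

For the “good job” example, the observed context “Good job 😊 😊” scores as positive, so only GIFs whose surrounding text is also positive would be kept.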

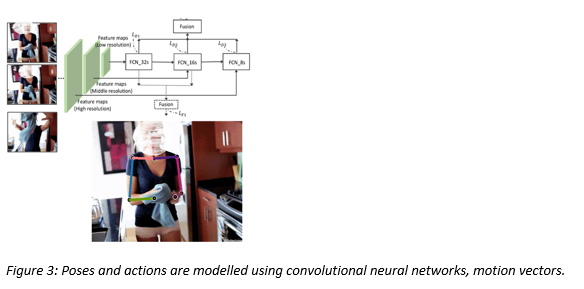

Expressiveness, Pose and Awesomeness using CNNs

Poses can be modeled using the positions of skeleton points such as the head, shoulders and hands. Actions can be modeled using the motion of these points across frames. To extract features that describe human poses and actions, we estimate the skeleton point positions in each frame and then estimate their motion across adjacent frames. A fully convolutional network is deployed to estimate each skeleton point of the upper body. The motion vectors of these skeleton points are then extracted to capture the motion information. A final model deduces the ‘awesomeness’ by examining the poses and the actions in the GIF.
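The motion-vector step can be sketched as follows. This assumes the skeleton points have already been estimated for each frame (the network itself is not shown), and the `motion_energy` summary is a hypothetical feature added for illustration rather than the one our final model consumes.

```python
import math


def motion_vectors(frames):
    """Compute per-joint displacement between adjacent frames.

    frames: list of dicts mapping a joint name to its (x, y) position.
    Returns one dict per frame transition, mapping joint name -> (dx, dy).
    """
    vectors = []
    for prev, cur in zip(frames, frames[1:]):
        vectors.append({
            joint: (cur[joint][0] - prev[joint][0],
                    cur[joint][1] - prev[joint][1])
            for joint in prev
            if joint in cur  # skip joints missing from either frame
        })
    return vectors


def motion_energy(frames):
    """Mean displacement magnitude over all joints and transitions."""
    magnitudes = [math.hypot(dx, dy)
                  for transition in motion_vectors(frames)
                  for dx, dy in transition.values()]
    return sum(magnitudes) / len(magnitudes) if magnitudes else 0.0
```

A GIF in which the subject waves a hand would show large vectors for the hand joint and near-zero vectors for the head, which is the kind of signal a downstream model can use to characterize the action.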

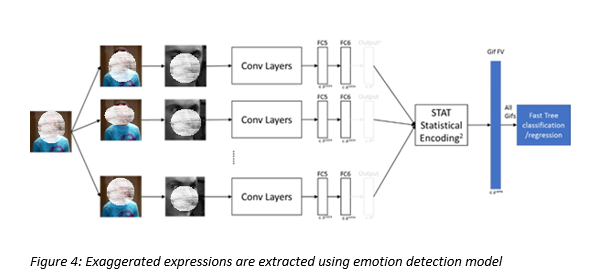

Exaggerated expressions

Here we analyze the facial expression of the subject to select results with more exaggerated facial expressions. We extract the expressions from multiple frames of the GIF and compute a score that indicates the level of exaggeratedness. Our GIF search then favors results with higher scores.
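One simple way to turn per-frame expressions into a single score is sketched below. The scoring rule is an assumption made for illustration: it supposes that an expression model outputs a probability for a “neutral” class on each frame, treats `1 - p(neutral)` as the frame's intensity, and averages the strongest few frames.

```python
def exaggeration_score(frame_expressions, top_k=3):
    """Average expression intensity over the top_k most expressive frames.

    frame_expressions: list of dicts mapping an expression label to its
    probability for one frame, e.g. {"neutral": 0.2, "surprise": 0.8}.
    """
    intensities = sorted(
        (1.0 - frame.get("neutral", 0.0) for frame in frame_expressions),
        reverse=True,
    )
    top = intensities[:top_k]
    return sum(top) / len(top) if top else 0.0
```

Averaging only the most expressive frames keeps a GIF with a brief but dramatic reaction from being penalized for the calmer frames around it.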

By pairing deep convolutional neural networks and our expressiveness, pose, action and exaggeratedness models with our large celebrity database, we can return awesome results for celebrity searches.

Image graph and other techniques

In addition to helping understand semantic relationships, Image Graph also improves ranking quality. Image Graph is made up of several clusters of similar images (in this case, GIFs) and holds historical data, such as clickthrough rate, for those images. As shown in the graph below, the images within the same cluster are visually similar (the distance between images denotes similarity), and the distance between clusters denotes the visual similarity of the main images within them. Now, if we know that an image in cluster D was extremely popular, we can propagate that clickthrough rate data to all other GIFs in cluster D. This greatly improves ranking quality. We can also improve the diversity of the recommended GIFs using this technique.
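The propagation step can be sketched in a few lines. The data layout and the choice to use the cluster mean are assumptions for illustration; the sketch only shows how GIFs with no history of their own can inherit a signal from visually similar neighbors.

```python
def propagate_ctr(clusters, observed_ctr):
    """Give every GIF in a cluster a clickthrough-rate estimate.

    clusters: dict mapping a cluster id to the list of GIF ids it contains
    observed_ctr: dict mapping a GIF id to its historical clickthrough rate
                  (only GIFs with history appear here)
    Returns a dict mapping GIF id -> estimated clickthrough rate.
    """
    estimates = {}
    for members in clusters.values():
        known = [observed_ctr[g] for g in members if g in observed_ctr]
        if not known:
            continue  # no signal in this cluster, nothing to propagate
        cluster_mean = sum(known) / len(known)
        for gif_id in members:
            # Keep a GIF's own history when available, else inherit the mean.
            estimates[gif_id] = observed_ctr.get(gif_id, cluster_mean)
    return estimates
```

If only one GIF in cluster D has click history, every other GIF in D receives that same estimate, while clusters with no history at all are left unscored.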

Finally, we also consider source authority, virality and popularity when deciding which GIFs to show on top. We also run detrimental content classifiers, one based on images and another based on text, to remove offensive content and keep our top results clean.

There you have it – did you imagine that so many machine learning techniques were required to make GIF ranking work? Altogether, these components bring intelligence to Bing’s GIF search experience, making it easier for users to find what they’re looking for. Give it a try on Bing image search.

Source: Bing Blog Feed