Always-on, real-time threat protection with Azure Cosmos DB – part one

This two-part blog post is part of a series about how organizations are using Azure Cosmos DB to meet real-world needs, and the difference it’s making to them. In part one, we explore the challenges that led the Microsoft Azure Advanced Threat Protection team to adopt Azure Cosmos DB and how they’re using it. In part two, we’ll examine the outcomes resulting from the team’s efforts.

Transformation of a real-time security solution to cloud scale

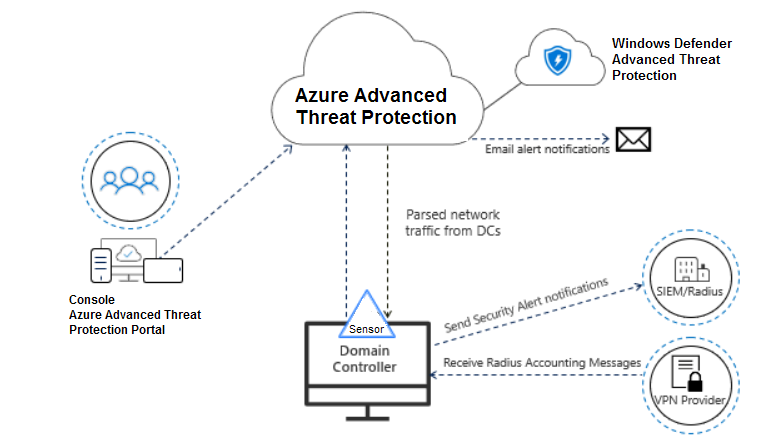

Microsoft Azure Advanced Threat Protection is a cloud-based security service that uses customers’ on-premises Active Directory signals to identify, detect, and investigate advanced threats, compromised identities, and malicious insider actions. Launched in 2018, it represents the evolution of Microsoft Advanced Threat Analytics, an on-premises solution, into Azure. Both offerings are composed of two main components:

- An agent, or sensor, which is installed on each of an organization’s domain controllers. The sensor inspects traffic sent from users to the domain controller along with Event Tracing for Windows (ETW) events generated by the domain controller, sending that information to a centralized back-end.

- A centralized back-end, or center, which aggregates the information from all the sensors, learns the behavior of the organization’s users and computers, and looks for anomalies that may indicate malicious activity.

Advanced Threat Analytics’ center used an on-premises instance of MongoDB as its main database—and still does today for on-premises installations. However, in developing the Azure Advanced Threat Protection center, a managed service in the cloud, Microsoft needed something more performant and scalable. “The back-end of Azure Advanced Threat Protection needs to massively scale, be upgraded on a weekly basis, and run continuously-evolving, advanced detection algorithms—essentially taking full advantage of all the power and intelligence that Azure offers,” explains Yaron Hagai, Principal Group Engineering Manager for Advanced Threat Analytics at Microsoft.

In searching for the best database for Azure Advanced Threat Protection to store its entities and profiles—the data learned in real time from all the sensors about each organization’s users and computers—Hagai’s team mapped out the following key requirements:

- Elastic, per-customer scalability: Each organization that adopts Azure Advanced Threat Protection can install hundreds of sensors, generating potentially tens of thousands of events per second. To learn each organization’s baseline and apply its anomaly detection algorithms in real time, Azure Advanced Threat Protection needed a database that could scale efficiently and cost-effectively.

- Ease of migration: The Azure Advanced Threat Protection data model is constantly evolving to support changes in detection logic. Hagai’s team didn’t want to worry about constantly maintaining backwards compatibility between the service’s code and its ever-changing data model, which meant they needed a database that could support quick and easy data migration with almost every new update to Azure Advanced Threat Protection they deployed.

- Geo-replication: Like all Azure services, Advanced Threat Protection must support customers’ critical disaster recovery and business continuity needs, including in the highly unlikely event of a datacenter failure. Through the use of geo-replication, customers’ data can be replicated from a primary datacenter to a backup datacenter, and the Azure Advanced Threat Protection workload can be switched to the backup datacenter in the event of a primary datacenter failure.

A managed, scalable, schema-less database in the cloud

The team chose Azure Cosmos DB as the back-end database for Azure Advanced Threat Protection. “As the only managed, scalable, schema-less database in Azure, Azure Cosmos DB was the obvious choice,” says Hagai. “It offered the scalability needed to support our growing customer base and the load that growth would put on our back-end service. It also provided the flexibility needed in terms of the data we store on each organization and its computers and users. And it offered the flexibility needed to continually add new detections and modify existing ones, which in turn requires the ability to constantly change the data stored in our Azure Cosmos DB collections.”

Collections and partitioning

Of the many APIs that Azure Cosmos DB supports, the development team considered both the SQL API and the Azure Cosmos DB API for MongoDB for Azure Advanced Threat Protection. Ultimately, they chose the SQL API because it gave them access to a rich, Microsoft-authored client SDK with support for multi-homing across global regions and a direct connectivity mode for low latency. The developers allocated one Azure Cosmos DB database per tenant, or customer. Each database has five collections, each of which starts with a single partition. “This allows us to easily delete the data for a customer if they stop using Azure Advanced Threat Protection,” explains Hagai. “More importantly, however, it lets us scale each customer’s collections independently based on the throughput generated by their on-premises sensors.”

Of the set of collections per customer, two usually grow to more than one partition:

- UniqueEntity, which contains all the metadata about the computers and users in the organization, as synchronized from Active Directory.

- UniqueEntityProfile, which contains the behavioral baseline for each entity in the UniqueEntity collection and is used by detection logic to identify behavioral anomalies that imply a compromised user or computer, or a malicious insider.

“Both collections have very high read/write throughput with large Request Units per second (RU/s) consumption,” explains Hagai. “Azure Cosmos DB seamlessly scales out storage of collections as they grow, and some of our large customers have scaled up to terabytes in size per collection, which would not have been possible with MongoDB on VMs.”

The other three collections for each customer typically contain fewer than 1,000 documents and do not grow past a single partition. They include:

- SystemProfile, which contains data learned for the tenant and applied to behavior-based detections.

- SystemEntity, which contains configuration information and data about tenants.

- Alert, which contains alerts that are generated and updated by Azure Advanced Threat Protection.
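The per-tenant layout described above can be sketched as a small planning function. This is only an illustration: the five collection names come from the article, while the database naming scheme (`tenant-<id>`), the `plan_tenant` helper, and the RU/s starting values are hypothetical and not taken from the Azure Advanced Threat Protection codebase.

```python
from dataclasses import dataclass
from typing import List

# Collection names as listed in the article.
COLLECTIONS = (
    "UniqueEntity",
    "UniqueEntityProfile",
    "SystemProfile",
    "SystemEntity",
    "Alert",
)
# The two collections the article says usually grow past one partition.
HIGH_THROUGHPUT = {"UniqueEntity", "UniqueEntityProfile"}


@dataclass(frozen=True)
class CollectionPlan:
    database: str      # one database per tenant
    name: str          # collection name
    initial_rus: int   # hypothetical starting throughput


def plan_tenant(tenant_id: str, base_rus: int = 400, hot_rus: int = 1000) -> List[CollectionPlan]:
    """Return the database/collection layout for one tenant.

    The naming scheme and RU/s values are assumptions for illustration.
    """
    database = f"tenant-{tenant_id}"
    return [
        CollectionPlan(database, name, hot_rus if name in HIGH_THROUGHPUT else base_rus)
        for name in COLLECTIONS
    ]
```

Because every collection for a tenant lives in one database, offboarding a customer reduces to deleting that single database, and each collection's throughput can be adjusted without touching other tenants.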

Migration

As the Azure Advanced Threat Protection detection logic constantly evolves and improves, so does the behavioral data stored in each customer’s UniqueEntityProfile collection. To avoid the need for backwards compatibility with outdated schemas, Azure Advanced Threat Protection maintains two migration mechanisms, which run with each upgrade to the service that includes changes to its data models:

- On-the-fly: As Azure Advanced Threat Protection reads documents from Azure Cosmos DB, it checks their version field. If the version is outdated, Azure Advanced Threat Protection migrates the document to the current version using explicit transformation logic written by Hagai’s team of developers.

- Batch: After a successful upgrade, Azure Advanced Threat Protection spins up a scheduled task to migrate all documents for all customers to the newest version, excluding those that have already been migrated by the on-the-fly mechanism.

Together, these two migration mechanisms ensure that once the service has been upgraded and the data access layer code has changed, no errors occur from parsing outdated documents. No backwards compatibility code is needed besides the explicit migration code, which is always removed in the subsequent version.
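The two migration paths can be illustrated with a minimal in-memory sketch. It assumes each document carries an integer `version` field and that the on-the-fly path writes the upgraded document back to the store. The field rename (`logons` to `logon_count`), the function names, and the dictionary standing in for a Cosmos DB collection are all hypothetical.

```python
from typing import Any, Dict

Document = Dict[str, Any]

CURRENT_VERSION = 2  # hypothetical schema version of the running service


def migrate(doc: Document) -> Document:
    """Explicit transformation from version 1 to version 2 (illustrative change)."""
    upgraded = dict(doc)
    upgraded["logon_count"] = upgraded.pop("logons", 0)  # rename a field
    upgraded["version"] = CURRENT_VERSION
    return upgraded


def read_document(store: Dict[str, Document], doc_id: str) -> Document:
    """On-the-fly path: check the version on read and upgrade if outdated."""
    doc = store[doc_id]
    if doc["version"] < CURRENT_VERSION:
        doc = migrate(doc)
        store[doc_id] = doc  # assumption: the upgraded document is persisted
    return doc


def batch_migrate(store: Dict[str, Document]) -> int:
    """Batch path: upgrade every outdated document, skipping current ones."""
    migrated = 0
    for doc_id, doc in list(store.items()):
        if doc["version"] < CURRENT_VERSION:
            store[doc_id] = migrate(doc)
            migrated += 1
    return migrated
```

Since the batch pass leaves every document at the current version, the `migrate` function only ever needs to handle one version step, which is why it can be deleted in the next release.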

Automatic scaling and backups

Collections with very high read/write throughput are often rate-limited when they reach their provisioned RU/s limit. When one of the service’s nodes (each node is a virtual machine) tries to perform an operation against a collection and receives a “429 Too Many Requests” rate-limiting exception, it uses Azure Service Fabric remoting to ask a centralized auto-scale service for increased throughput. The centralized service aggregates such requests from multiple nodes so that throughput is not increased more than once within a short window of time, because a single burst of traffic can trigger requests from several nodes at once. To minimize overall RU/s costs, a similar, periodic scale-down process reduces provisioned throughput when appropriate, such as during each customer’s non-working hours.
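The coalescing behavior of the centralized auto-scale service can be sketched as follows. This is a simplified, single-process illustration: a direct method call stands in for Service Fabric remoting, and the class name, the fixed RU/s step, the 60-second window, and the scale-down floor are all assumptions, not details from the article.

```python
import time
from typing import Callable, Dict


class AutoScaleService:
    """Raises a collection's throughput at most once per time window."""

    def __init__(
        self,
        provisioned: Dict[str, int],
        step_rus: int = 1000,
        window_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.provisioned = dict(provisioned)  # collection -> current RU/s
        self.step_rus = step_rus
        self.window_seconds = window_seconds
        self.clock = clock
        self._last_scale_up: Dict[str, float] = {}

    def request_scale_up(self, collection: str) -> bool:
        """Called by a node after a 429; returns True if throughput was raised."""
        now = self.clock()
        last = self._last_scale_up.get(collection)
        if last is not None and now - last < self.window_seconds:
            # Another node already reported this burst; do not scale twice.
            return False
        self.provisioned[collection] += self.step_rus
        self._last_scale_up[collection] = now
        return True

    def scale_down(self, collection: str, floor_rus: int) -> None:
        """Periodic scale-down step, never going below floor_rus."""
        lowered = self.provisioned[collection] - self.step_rus
        self.provisioned[collection] = max(floor_rus, lowered)
```

Injecting the clock keeps the window logic testable without sleeping; a production service would also need to persist its state and call the database to apply the new throughput.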

Azure Advanced Threat Protection takes advantage of the auto-backup feature of Azure Cosmos DB to help protect each of the collections. The backups reside in Azure Blob storage and are replicated to another region through the use of geo-redundant storage (GRS). Azure Advanced Threat Protection also replicates customer configuration data to another region, which allows for quick recovery in the case of a disaster. “We do this primarily to safeguard the sensor configuration data—preventing the need for an IT admin to reconfigure hundreds of sensors if the original database is lost,” explains Hagai.

Azure Advanced Threat Protection recently began onboarding full geo-replication. “We’ve started to enable geo-replication and multi-region writes for seamless and effortless replication of our production data to another region,” says Hagai. “This will allow us to further improve and guarantee service availability and will simplify service delivery versus having to maintain our own high-availability mechanisms.”

Continue on to part two, which covers the outcomes resulting from the Azure Advanced Threat Protection team’s implementation of Azure Cosmos DB.

Source: Azure Blog Feed