Regenerative Maps Alive on the Edge

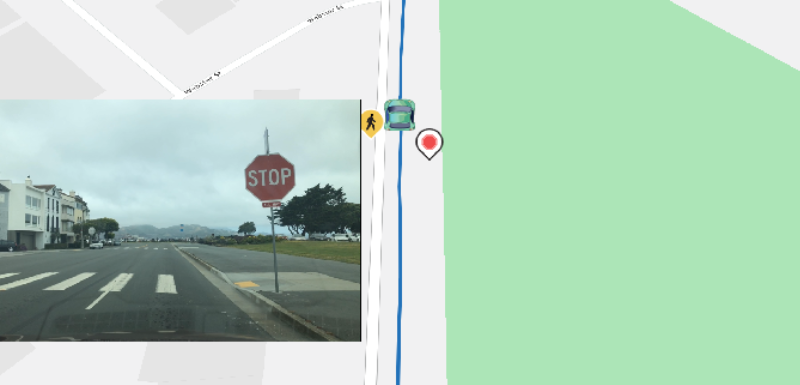

This week Mapbox announced it will integrate its Vision SDK with the Microsoft Azure IoT platform, enabling developers to build innovative applications and solutions for smart cities, the automotive industry, public safety, and more. This is an important moment in the evolution of map creation. The Mapbox Vision SDK provides artificial intelligence (AI) capabilities for identifying objects through semantic segmentation, a computer vision technique in which a machine learning model assigns a class label (road, crosswalk, sign, pedestrian, and so on) to every pixel of a camera image. Running semantic segmentation on the edge means that objects such as stop signs, crosswalks, speed limit signs, people, bicycles, and other moving objects can be identified at run time by a camera with AI running under the covers. Maps built from these classifications are commonly referred to as HD (high-definition) maps.
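To make the per-pixel idea concrete, here is a minimal Python sketch, assuming a segmentation network has already produced a score for each class at every pixel. The class list and the toy score grid are hypothetical placeholders, not the Mapbox Vision SDK's label set or output format; the sketch shows only the final labeling step, where each pixel takes its highest-scoring class.

```python
# Hypothetical class list for illustration; NOT the Mapbox Vision SDK's labels.
CLASSES = ["road", "crosswalk", "sign", "person", "bicycle"]


def segment(scores):
    """Assign each pixel the class with the highest score.

    scores[row][col] is a list of per-class scores (for example, softmax
    outputs from a segmentation network), one entry per name in CLASSES.
    Returns a label map with the same height and width.
    """
    return [
        [CLASSES[max(range(len(px)), key=px.__getitem__)] for px in row]
        for row in scores
    ]


# A toy 2x2 "image" of scores standing in for real network output.
scores = [
    [[0.90, 0.05, 0.02, 0.02, 0.01], [0.10, 0.80, 0.05, 0.03, 0.02]],
    [[0.05, 0.05, 0.10, 0.70, 0.10], [0.20, 0.10, 0.60, 0.05, 0.05]],
]
labels = segment(scores)
print(labels)  # [['road', 'crosswalk'], ['person', 'sign']]
```

In a real pipeline, contiguous regions of the same label would then be grouped into individual objects before being placed on a map.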

HD maps are more machine-friendly than conventional maps as an input to autonomous vehicles. Once objects are classified, the onboard GPS and accelerometer allow their locations to be registered and placed onto a map or, in the case of “living maps,” registered into the map at run time. This is an important concept: it is where edge computing intersects with location to streamline the digitization of our world. Edge computing, such as that powered by Azure IoT Edge and the Mapbox Vision SDK, will enable map data to be generated in real time. The critical steps will be to allow these HD maps to be (1) created through semantic segmentation, (2) integrated into the onboard map, and (3) validated against the map using CRUD (create, read, update, delete) operations and then distributed back to devices for use. Microsoft’s Azure Machine Learning services can be used to train these AI modules and add new segmentation capabilities, while other Azure services provide deeper analysis of the data.
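The registration and CRUD steps described above might look roughly like the following sketch. It assumes the vehicle knows its GPS position and heading (heading would typically come from an IMU or compass rather than the accelerometer alone) and that the camera pipeline supplies a range and relative bearing for each detected object. `project`, `OnboardMap`, and `register` are hypothetical names for illustration, not part of the Mapbox Vision SDK or any Azure API, and the 5 m matching radius is an arbitrary assumption.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass
class Feature:
    kind: str  # semantic class, e.g. "stop_sign"
    lat: float
    lon: float
    observations: int = 1


def project(lat, lon, heading_deg, bearing_deg, range_m):
    """Offset a GPS fix by range_m along (heading + relative bearing).

    Uses a local flat-earth (equirectangular) approximation, which is
    adequate over the tens of metres a camera can see.
    """
    theta = math.radians(heading_deg + bearing_deg)  # clockwise from north
    north = range_m * math.cos(theta)
    east = range_m * math.sin(theta)
    dlat = math.degrees(north / EARTH_RADIUS_M)
    dlon = math.degrees(east / (EARTH_RADIUS_M * math.cos(math.radians(lat))))
    return lat + dlat, lon + dlon


def distance_m(a_lat, a_lon, b_lat, b_lon):
    """Approximate ground distance in metres (equirectangular)."""
    x = math.radians(b_lon - a_lon) * math.cos(math.radians((a_lat + b_lat) / 2))
    y = math.radians(b_lat - a_lat)
    return EARTH_RADIUS_M * math.hypot(x, y)


class OnboardMap:
    """Hypothetical in-memory feature store exposing CRUD operations."""

    def __init__(self):
        self._features = {}
        self._next_id = 1

    def create(self, feature):
        fid = self._next_id
        self._next_id += 1
        self._features[fid] = feature
        return fid

    def read(self, fid):
        return self._features.get(fid)

    def update(self, fid, feature):
        if fid not in self._features:
            raise KeyError(fid)
        self._features[fid] = feature

    def delete(self, fid):
        del self._features[fid]

    def register(self, kind, lat, lon, match_radius_m=5.0):
        """Update a nearby feature of the same kind, or create a new one."""
        for fid, f in self._features.items():
            if f.kind == kind and distance_m(f.lat, f.lon, lat, lon) <= match_radius_m:
                n = f.observations
                # Running average of all observed positions for this feature.
                self.update(fid, Feature(kind,
                                         (f.lat * n + lat) / (n + 1),
                                         (f.lon * n + lon) / (n + 1),
                                         n + 1))
                return fid
        return self.create(Feature(kind, lat, lon))


m = OnboardMap()
# Vehicle heading due east; a stop sign 20 m ahead, 10 degrees to the right.
a = m.register("stop_sign", *project(47.6420, -122.1370, 90.0, 10.0, 20.0))
# A later pass sees the same sign with a slightly different estimate.
b = m.register("stop_sign", *project(47.6420, -122.1370, 90.0, 9.0, 20.5))
print(a, b, m.read(a).observations)  # 1 1 2
```

Averaging repeated observations is one simple way a living map could refine a feature's position as more vehicles pass it; a production system would also need to retire features that stop being observed, reconcile conflicting reports from different devices, and synchronize with the cloud copy before distributing updates.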

The advent and adoption of HD maps leads us to a different challenge: infrastructure. Even as 5G networks roll out, we’re seeing autonomous test vehicles each generate upwards of 5 TB of data per day. At scale, given the millions of miles of driving required for training and the number of vehicles involved, we estimate aggregate data volumes in the tens of petabytes per day. Even a 5G network won’t be able to carry that much data, and transferring it certainly won’t be cheap. As a result, edge computing and artificial intelligence will be among the leading technologies for powering autonomous vehicles, the Location of Things, and smart cities.
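A quick back-of-envelope calculation shows why raw uploads do not scale. This sketch assumes decimal units (1 TB = 10^12 bytes) and spreads each vehicle's 5 TB evenly over a full 24 hours; real test vehicles record in shorter shifts, so their peak uplink demand would be higher still. The 10 PB/day figure is simply the low end of "tens of petabytes," chosen here for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60
TB = 1e12  # decimal terabyte, in bytes
PB = 1e15  # decimal petabyte, in bytes


def sustained_mbps(bytes_per_day):
    """Average rate in megabits/s needed to move a daily data volume."""
    return bytes_per_day * 8 / SECONDS_PER_DAY / 1e6


per_vehicle = 5 * TB
print(round(sustained_mbps(per_vehicle)))  # 463

# Fleet size implied by a 10 PB/day aggregate at 5 TB per vehicle.
print(round(10 * PB / per_vehicle))  # 2000
```

Sustaining roughly 463 Mbit/s per vehicle, around the clock, across thousands of vehicles is the kind of load that pushes processing onto the vehicle itself, so that only extracted map changes need to travel upstream.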

A natural place to start connecting the digital dots is location. Microsoft’s collaboration with Mapbox illustrates our commitment to cloud and edge computing in conjunction with the proliferation of artificial intelligence. Together, the Mapbox Vision SDK, Azure IoT Hub, and Azure IoT Edge simplify the flow of data from the edge to the Azure cloud and back out to devices. Imagine hundreds of millions of connected devices all making and sharing real-time maps that empower customers. This is a map regenerating itself. This is a live map.

Microsoft Azure is excited to collaborate with Mapbox on this because we understand the importance of geospatial capabilities for the Location of Things, as demonstrated by our own continued investment in Azure Maps. Azure Maps, a service on the Azure IoT platform, brings the Location of Things to Microsoft enterprise customers and developers through a range of location-based services and mapping capabilities, with more to come.

Source: Azure Blog Feed