How news platforms can improve uptake with Microsoft Azure’s Video AI service

I’m Anna Thomas, an Applied Data Scientist within Microsoft Engineering. My goal is to help the field and our partners better integrate AI tools into their applications. Recently, my team reached out to Microsoft News to see how they’re analyzing their data and how our services might help.

Microsoft News ingests more than 100,000 articles and videos every day from various news providers. With so many tasks involved, such as classifying news topics and tagging and translating content, I was immediately curious how they process all of that information.

As it turns out, Microsoft News has spent years developing advanced algorithms that analyze their articles and determine how to increase personalization, which ultimately increases consumption. However, when I asked them whether there were any gaps, they were quick to answer that they would love more insight into their videos.

Analyzing videos at scale to obtain insights is a nontrivial task. For a news platform especially, video insights can improve search quality, increase user engagement through personalization, and make videos more accessible through captioning, translation, and more. Many tasks are involved: classifying news topics (potentially including opinion detection, provider authority, and sentiment), tagging content, translating content, summarizing content, grouping similar content, and so on.

Exploring Video Indexer

I set out to determine how we could meet Microsoft News’s requirements using what I expected would be a combination of Video Indexer, Cognitive Services (Text Analytics, Language Understanding, Computer Vision, Face, Content Moderator, and more), and maybe even some custom solutions.

Here are the results of my research:

| Requested feature | Service that could help |
|---|---|
| Speech-to-text extraction | Video Indexer API |
| Subtitling and captions for browsing the video | Video Indexer API |
| Profane language detection | Video Indexer API |
| Visual profanity detection (moderation use case) | Video Indexer API |
| Topic identification based on OCR and transcript (classification use case) | Video Indexer API |
| Ability to extract all frames and detect salient frames from keyframes using vision services (no reprocessing expected) | Video Indexer API |
| Choosing the best frame for the promo card image | Video Indexer API |
| Enhancing the site with a filmstrip for users navigating videos | Video Indexer API |
| Keyword, label, and entity extraction (Bing/Satori IDs) based on vision and text | Video Indexer API |

I must admit I was surprised: the Video Indexer API does an impressive job of combining insights that you would otherwise get from separate Cognitive Services APIs, such as profane language detection from the Content Moderator API or speech-to-text from the Speech API. You can see the full list of features in the documentation.

Intrigued by how much the Video Indexer API claimed to be capable of, I decided to check it out myself. It was easy to create a sample in C# (you can check out my sample on GitHub): a simple console application that bulk uploads and processes Microsoft News videos.
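The bulk-upload flow boils down to "submit everything, then poll until each video is done." The following is a minimal Python sketch of that loop, not a port of the C# sample. It assumes a hypothetical client object with `upload(name, video_url)` (returning a video ID) and `get_state(video_id)` methods, which you would implement against the Video Indexer REST API yourself; the terminal state names `Processed` and `Failed` are also assumptions. A fake in-memory client stands in for the service so the loop can run offline.

```python
import time
from typing import Callable, Dict, Iterable, List, Protocol, Tuple


class IndexerClient(Protocol):
    """Hypothetical client interface; implement it against the Video Indexer REST API."""

    def upload(self, name: str, video_url: str) -> str: ...

    def get_state(self, video_id: str) -> str: ...


# Assumed terminal processing states; anything else is treated as "still working".
FINAL_STATES = {"Processed", "Failed"}


def bulk_index(
    client: IndexerClient,
    videos: Iterable[Tuple[str, str]],
    poll_seconds: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, str]:
    """Upload every (name, url) pair, then poll until each video reaches a final state."""
    # Submit all uploads up front so the service can work on them concurrently.
    pending: List[str] = [client.upload(name, url) for name, url in videos]
    results: Dict[str, str] = {}
    while pending:
        still_pending = []
        for video_id in pending:
            state = client.get_state(video_id)
            if state in FINAL_STATES:
                results[video_id] = state
            else:
                still_pending.append(video_id)
        pending = still_pending
        if pending:
            sleep(poll_seconds)
    return results


class FakeClient:
    """In-memory stand-in for testing: each video finishes on its second poll."""

    def __init__(self) -> None:
        self.polls: Dict[str, int] = {}

    def upload(self, name: str, video_url: str) -> str:
        video_id = f"id-{name}"
        self.polls[video_id] = 0
        return video_id

    def get_state(self, video_id: str) -> str:
        self.polls[video_id] += 1
        return "Processed" if self.polls[video_id] >= 2 else "Processing"


results = bulk_index(
    FakeClient(),
    [("episode-1", "https://example.com/1.mp4"), ("episode-2", "https://example.com/2.mp4")],
    sleep=lambda _: None,
)
print(results)  # {'id-episode-1': 'Processed', 'id-episode-2': 'Processed'}
```

Injecting `sleep` keeps the polling interval configurable and lets tests run without waiting.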

For the purposes of this blog, I wanted to share the results from one video: an episode I did with the AI Show a while back on bots and Cognitive Services. Since it’s only one video, I used the Video Indexer portal, which has a simple UI for uploading and processing videos. The video is about 12 minutes long, and it took about 4 minutes to process. (I checked with the team, and they reported that processing typically takes 30 to 50 percent of the video’s duration, so this number seems about right.)
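The team’s rule of thumb is simple arithmetic: 30 to 50 percent of a 12-minute (720-second) video is 216 to 360 seconds, or roughly 3.6 to 6 minutes, so the observed 4 minutes falls within that range. A tiny helper like the one below (its name is purely illustrative) makes the estimate reusable when planning a bulk run.

```python
from typing import Tuple


def estimated_processing_seconds(duration_seconds: int) -> Tuple[int, int]:
    """Return a (low, high) processing-time estimate from the 30-50 percent rule of thumb."""
    return duration_seconds * 30 // 100, duration_seconds * 50 // 100


low, high = estimated_processing_seconds(12 * 60)  # the 12-minute AI Show episode
print(f"Expect roughly {low / 60:.1f} to {high / 60:.1f} minutes")  # 3.6 to 6.0
observed = 4 * 60  # the 4 minutes it actually took
print(low <= observed <= high)  # True
```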

Once the video was processed, I was given a link to a widget that I could use to embed the video, with its enhanced filmstrip, in a website. I can also download all the insights as a JSON file, change the language of the insights and transcripts, or download just the transcript.

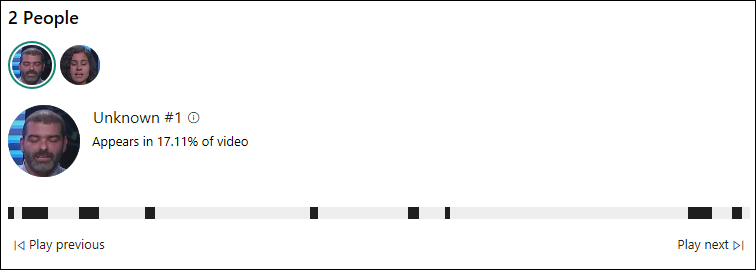
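Once you have the insights JSON, pulling out the transcript takes only a few lines. This Python sketch assumes (without checking it against the full schema) that transcript items sit under `videos[i].insights.transcript`, each with a `text` field and `instances` carrying `H:MM:SS` start times. The sample below is made up and heavily trimmed to that assumed shape.

```python
import json
from typing import List, Tuple


def parse_timestamp(ts: str) -> float:
    """Convert an 'H:MM:SS' or 'H:MM:SS.fff' timestamp into seconds."""
    hours, minutes, seconds = ts.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def transcript_lines(index: dict) -> List[Tuple[float, str]]:
    """Return (start_seconds, text) for every transcript instance, sorted by time.

    Assumes transcript items live under videos[i].insights.transcript and that each
    item has a 'text' field plus 'instances' with 'start' timestamps.
    """
    lines = []
    for video in index.get("videos", []):
        for item in video.get("insights", {}).get("transcript", []):
            for instance in item.get("instances", []):
                lines.append((parse_timestamp(instance["start"]), item["text"]))
    return sorted(lines)


# Made-up, heavily trimmed sample in the assumed shape.
sample = json.loads("""
{"videos": [{"insights": {"transcript": [
  {"text": "Second sample line.", "instances": [{"start": "0:00:03.5", "end": "0:00:06"}]},
  {"text": "First sample line.", "instances": [{"start": "0:00:00", "end": "0:00:03.5"}]}
]}}]}
""")
for start, text in transcript_lines(sample):
    print(f"{start:5.1f}s  {text}")
```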

Below, you can see who appears in the video. If Rodrigo Souza and I happened to be famous (apparently we’re not, and now it’s proven!), Bing would return our names and the beginning of our Wikipedia biographies.

With the widget, I can skip to the clips where Rodrigo is in focus and talking. The widget also lists keywords, and, just as with people, I can skip through the video to hear the clips that cover each topic.
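Skipping to a person’s or topic’s clips amounts to collecting the time ranges of every instance of that label. The following Python sketch does this over a downloaded insights object. It assumes that faces are listed under `faces` with a `name` field, keywords under `keywords` with a `text` field, and both carry `instances` with `start`/`end` timestamps; the insights below are made up for illustration.

```python
from typing import List, Tuple


def parse_timestamp(ts: str) -> float:
    """Convert an 'H:MM:SS' or 'H:MM:SS.fff' timestamp into seconds."""
    hours, minutes, seconds = ts.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def clips_for(insights: dict, kind: str, label: str) -> List[Tuple[float, float]]:
    """Return sorted (start, end) seconds for every appearance of `label`.

    `kind` is the insights key to search ('faces' or 'keywords'); faces are assumed
    to carry their label in 'name' and keywords in 'text'.
    """
    field = "name" if kind == "faces" else "text"
    clips = []
    for item in insights.get(kind, []):
        if item.get(field) == label:
            for inst in item.get("instances", []):
                clips.append((parse_timestamp(inst["start"]), parse_timestamp(inst["end"])))
    return sorted(clips)


# Made-up insights in the assumed shape.
insights = {
    "faces": [{"name": "Rodrigo Souza", "instances": [
        {"start": "0:01:10", "end": "0:01:25.5"},
        {"start": "0:00:05", "end": "0:00:20"},
    ]}],
    "keywords": [{"text": "bots", "instances": [{"start": "0:02:00", "end": "0:02:30"}]}],
}
print(clips_for(insights, "faces", "Rodrigo Souza"))  # [(5.0, 20.0), (70.0, 85.5)]
print(clips_for(insights, "keywords", "bots"))  # [(120.0, 150.0)]
```

A player can then seek to each `start` value in turn, which is roughly what the widget’s “jump to clip” behavior looks like from the outside.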

Video Indexer also creates several other enhanced filmstrips that I can use to search the video, including visual labels, brands, emotions, and keyframes. It can also layer insights onto the transcript; here, I’ve chosen to show people alongside the transcript.
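A layered transcript can be approximated by pairing each spoken line with whoever is on screen at the same time, that is, whose face instance overlaps the line’s time range. This Python sketch is our own approximation, not how the portal builds its view. It assumes the same hypothetical `faces` and `transcript` shapes (with `instances` carrying `start`/`end` timestamps) and uses made-up data.

```python
from typing import List, Tuple


def parse_timestamp(ts: str) -> float:
    """Convert an 'H:MM:SS' or 'H:MM:SS.fff' timestamp into seconds."""
    hours, minutes, seconds = ts.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def overlaps(a_start: float, a_end: float, b_start: float, b_end: float) -> bool:
    """True when two half-open time ranges share any time."""
    return a_start < b_end and b_start < a_end


def layered_transcript(insights: dict) -> List[Tuple[str, List[str]]]:
    """Pair each transcript line with the people on screen while it is spoken."""
    # Flatten every face appearance into (name, start, end).
    appearances = [
        (face["name"], parse_timestamp(inst["start"]), parse_timestamp(inst["end"]))
        for face in insights.get("faces", [])
        for inst in face.get("instances", [])
    ]
    layered = []
    for line in insights.get("transcript", []):
        for inst in line.get("instances", []):
            start, end = parse_timestamp(inst["start"]), parse_timestamp(inst["end"])
            people = sorted({name for name, s, e in appearances if overlaps(start, end, s, e)})
            layered.append((line["text"], people))
    return layered


# Made-up insights in the assumed shape.
insights = {
    "faces": [
        {"name": "Anna Thomas", "instances": [{"start": "0:00:00", "end": "0:00:10"}]},
        {"name": "Rodrigo Souza", "instances": [{"start": "0:00:08", "end": "0:00:20"}]},
    ],
    "transcript": [
        {"text": "Sample line A.", "instances": [{"start": "0:00:01", "end": "0:00:05"}]},
        {"text": "Sample line B.", "instances": [{"start": "0:00:09", "end": "0:00:12"}]},
    ],
}
for text, people in layered_transcript(insights):
    print(f"[{', '.join(people)}] {text}")
```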

I can also decide that I want all the insights or the layered transcript in another language. See a snippet of the same layered transcript in Spanish below.

Video Indexer makes it really easy to unlock insights from videos. While this scenario shows how valuable Video Indexer can be for news, I think it is relevant in other scenarios as well. Here are a few other ideas of how and where to use it:

- In large enterprises, there are tons of documents (and videos!) circulating on the intranet. Videos could be related to sales, marketing, engineering, learning, and more, and as an employee it can be hard to find the ones you need. To reuse IP and make existing materials more accessible, you could use Video Indexer (which has Azure Search built in) to create an enhanced pipeline for searching videos.

- Taking the previous example one step further, you could create a custom skill in Azure Cognitive Search that pulls video insights into a search index on a schedule. You may also choose to use predefined skills to get insights from other types of documents (e.g., images, PDFs, and PowerPoint decks). By using Azure Search to configure an enhanced indexing pipeline, you can give users an intelligent search service across all their documents. Learn more about Cognitive Search in this LearnAI workshop.

- In the education space, a student or researcher can spend a long time searching lectures or presentations for the answer to a specific question. With the help of Video Indexer, finding videos that contain certain keywords or topics becomes easier. To take it one step further, you could use the insights obtained from the Video Indexer API to build a transfer learning model that performs Machine Reading Comprehension (essentially, training a model to retrieve the answer to a question a student or researcher may have).
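At its core, the “find the videos that mention X” idea in these scenarios is an inverted index from keywords to (video, timestamp) hits. The Python sketch below builds a toy in-memory version over several videos’ insights. It is only an illustration of the idea, not how Azure Search or Video Indexer’s built-in search work internally. It assumes the same hypothetical `keywords` shape as above, and the lecture data is made up.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Hit = Tuple[str, float]  # (video name, start time in seconds)


def parse_timestamp(ts: str) -> float:
    """Convert an 'H:MM:SS' or 'H:MM:SS.fff' timestamp into seconds."""
    hours, minutes, seconds = ts.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def build_keyword_index(videos: Dict[str, dict]) -> Dict[str, List[Hit]]:
    """Map each lower-cased keyword to sorted (video_name, start_seconds) hits."""
    index: Dict[str, List[Hit]] = defaultdict(list)
    for video_name, insights in videos.items():
        for keyword in insights.get("keywords", []):
            for inst in keyword.get("instances", []):
                index[keyword["text"].lower()].append((video_name, parse_timestamp(inst["start"])))
    return {term: sorted(hits) for term, hits in index.items()}


def search(index: Dict[str, List[Hit]], query: str) -> List[Hit]:
    """Return every (video, timestamp) where the keyword was detected."""
    return index.get(query.lower(), [])


# Made-up insights for two hypothetical lecture videos.
videos = {
    "lecture-01": {"keywords": [
        {"text": "Gradient descent", "instances": [{"start": "0:12:30", "end": "0:14:00"}]},
    ]},
    "lecture-02": {"keywords": [
        {"text": "gradient descent", "instances": [{"start": "0:03:15", "end": "0:04:00"}]},
        {"text": "Regularization", "instances": [{"start": "0:20:00", "end": "0:25:00"}]},
    ]},
}
index = build_keyword_index(videos)
print(search(index, "Gradient Descent"))  # [('lecture-01', 750.0), ('lecture-02', 195.0)]
```

Lower-casing both the indexed keywords and the query is the simplest normalization; a real search service adds stemming, ranking, and fuzzy matching on top.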

Have other use cases or tips for using Video Indexer or Azure Cognitive Services? Reach out, and together we can continue to democratize AI. Follow me on Twitter and LinkedIn.

Source: Azure Blog Feed