HDInsight Metastore Migration Tool open source release now available

We are excited to share the release of the Microsoft Azure HDInsight Metastore Migration Tool (HMMT), an open-source script that can be used for applying bulk edits to the Hive metastore.

The HDInsight Metastore Migration Tool is a low-latency, no-installation solution for challenges related to data migrations in Azure HDInsight. There are many reasons why a Hive data migration may need to take place. You may need to protect your data by enabling secure transfer on your Azure storage accounts. Perhaps you will be migrating your Hive tables from WASB to Azure Data Lake Storage (ADLS) Gen2 as part of your upgrade from HDInsight 3.6 to 4.0. Or you may have decided to organize the locations of your databases, tables, and user-defined functions (UDFs) to follow a cohesive structure. With HMMT, these migration scenarios and many others no longer require manual intervention.

HMMT handles Hive metadata migration scenarios in a quick, safe, and controllable environment. This blog post is divided into three sections. First, the background to HMMT is outlined with respect to the Hive metastore and Hive storage patterns. The second section covers the design of HMMT and describes initial setup steps. Finally, some sample migrations are described and solved with HMMT as a demonstration of its usage and value.

Background

The Hive metastore

The Hive metastore is a SQL database for Hive metadata such as table, database, and user-defined function storage locations. The Hive metastore is provisioned automatically when an HDInsight cluster is created. Alternatively, an existing SQL database may be used to persist metadata across multiple clusters. The existing SQL database is then referred to as an external metastore. HMMT is intended to be used against external metastores to persist metadata migrations over time and across multiple clusters.

Hive storage uniform resource identifiers

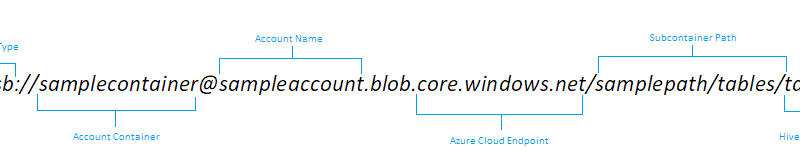

For each Hive table, database, or UDF available to the cluster, the Hive metastore keeps a record of that artifact’s location in external storage. Artifact locations are persisted in a Windows Azure Storage Blob or in Azure Data Lake Storage. Each location is represented as an Azure storage uniform resource identifier (URI), which describes the account-type, account, container, and subcontainer path that the artifact lives in. The above diagram describes the schema used to represent Hive table URIs. The same schema pattern applies to Hive databases and UDFs.
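To make the URI schema concrete, here is a minimal Python sketch that splits a storage URI into the four parts described above. It assumes the `container@account.endpoint` layout used by WASB and ABFS URIs; the names `StorageUri` and `parse_storage_uri` and the sample URI are illustrative only and are not part of HMMT.

```python
from typing import NamedTuple
from urllib.parse import urlsplit


class StorageUri(NamedTuple):
    account_type: str  # URI scheme, e.g. "wasb" or "abfss"
    account: str       # storage account name
    container: str     # container name
    path: str          # subcontainer path


def parse_storage_uri(uri: str) -> StorageUri:
    """Split a container@account style storage URI into its four parts."""
    parts = urlsplit(uri)
    container, sep, host = parts.netloc.partition("@")
    if not sep:
        raise ValueError(f"expected container@account in {uri!r}")
    # The account name is the first label of the host name.
    account = host.split(".", 1)[0]
    return StorageUri(parts.scheme, account, container, parts.path)


# Hypothetical table location, used only for illustration.
example = parse_storage_uri(
    "wasb://data@acc1.blob.core.windows.net/hive/warehouse/table1"
)
print(example)
```

URIs that do not carry a container before the account are rejected by this sketch rather than guessed at.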

Suppose a Hive query is executed against table1. Hive will first attempt to read the table contents from the corresponding storage entry found in the Hive metastore. Hive supports commands for displaying and updating a table’s storage location, such as DESCRIBE FORMATTED to show it and ALTER TABLE ... SET LOCATION to change it.

Changing the storage location of a table requires the execution of an update command corresponding to the table of interest. If multiple table locations are to be changed, multiple update commands must be executed. Since storage locations must be updated manually, wholesale changes to the metastore can be an error-prone and time-consuming task. The location update story concerning non-table artifacts is even less favorable – the location of a database or UDF cannot be changed from within Hive. Therefore, the motivation behind releasing HMMT to the public is to provide a pain-free way to update the storage location of Hive artifacts. HMMT directly alters the Hive metastore, which is the fastest (and only) way to make changes to Hive artifacts at scale.
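The manual approach can be sketched as follows, to show why it scales poorly: every table needs its own HiveQL statement. This is only an illustration in Python. The helper name `build_location_updates`, the table names, and the URIs are hypothetical.

```python
from typing import Dict, List


def build_location_updates(new_locations: Dict[str, str]) -> List[str]:
    """Return one ALTER TABLE ... SET LOCATION statement per table."""
    statements = []
    for table, location in new_locations.items():
        if "'" in location:
            # Keep the sketch simple: refuse values that would need escaping.
            raise ValueError(f"unsupported quote in location: {location!r}")
        statements.append(f"ALTER TABLE {table} SET LOCATION '{location}';")
    return statements


# Hypothetical tables and locations, used only for illustration.
statements = build_location_updates({
    "table1": "wasbs://data@acc1.blob.core.windows.net/hive/warehouse/table1",
    "table2": "wasbs://data@acc1.blob.core.windows.net/hive/warehouse/table2",
})
for statement in statements:
    print(statement)
```

Even with a generator like this, the statements cover tables only. Databases and UDFs have no equivalent command, which is the gap HMMT fills.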

How HMMT works

HMMT generates a series of SQL commands that will directly update the Hive metastore based on the input parameters. Only storage URIs that match the input parameters will be affected by the script. The tool can alter any combination of Hive storage accounts, account-types, containers, and subcontainer paths. Note that HMMT is exclusively supported on HDInsight 3.6 and onwards.
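The match-then-rewrite idea can be illustrated with a small Python sketch. This is not HMMT's implementation, which works through generated SQL. It only models the behavior described above, under these assumptions: a source filter left unset acts as a wildcard, a destination value left unset keeps the original attribute, and the endpoint suffix of the host name is left untouched. The function name `migrate_uri` and its parameters are hypothetical.

```python
from typing import Collection, Optional
from urllib.parse import urlsplit, urlunsplit


def migrate_uri(
    uri: str,
    src_types: Optional[Collection[str]] = None,
    src_accounts: Optional[Collection[str]] = None,
    src_containers: Optional[Collection[str]] = None,
    dest_type: Optional[str] = None,
    dest_account: Optional[str] = None,
    dest_container: Optional[str] = None,
) -> str:
    """Return the rewritten URI, or the input unchanged if it does not match."""
    parts = urlsplit(uri)
    container, _, host = parts.netloc.partition("@")
    account, _, endpoint = host.partition(".")

    # A source filter left as None is a wildcard and matches everything.
    filters = (
        (src_types, parts.scheme),
        (src_accounts, account),
        (src_containers, container),
    )
    if any(allowed is not None and value not in allowed
           for allowed, value in filters):
        return uri

    # Destination values left as None keep the original attribute.
    new_netloc = f"{dest_container or container}@{dest_account or account}.{endpoint}"
    return urlunsplit(
        parts._replace(scheme=dest_type or parts.scheme, netloc=new_netloc)
    )


# Hypothetical URI: only the container changes, everything else is kept.
print(migrate_uri(
    "wasb://old@acc1.blob.core.windows.net/hive/t1",
    src_accounts={"acc1"},
    dest_container="new",
))
```

Keeping the endpoint suffix is a simplification: a move between account types that use different endpoints would need extra handling that this sketch does not attempt.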

Start using HMMT right away by downloading it directly from the Microsoft HDInsight GitHub page. HMMT requires no installation and can be run from any UNIX command line that has one of the supported query clients installed. The initially supported clients are Beeline and SqlCmd. The script does not need to be run from within an HDInsight cluster, but because Beeline is supported, it can be run directly from any HDInsight cluster headnode. Make sure the script is run from an IP address that is whitelisted on the Hive metastore SQL Server.

Disclaimer: Since HMMT directly alters the contents of the Hive metastore, use the script with caution. The tool launches as a dry run by default: when the script is executed, the post-migration contents of the metastore are shown as console output to describe the potential impact of the execution. For the specified migration parameters to take effect, the flag “liverun” must be passed to the HMMT command. In addition, it is strongly recommended to keep backups of the Hive metastore even if you do not intend to use HMMT. More information regarding Hive metastore backups can be found at the end of this blog post.

Usage examples

HMMT supports a wide variety of use cases related to the migration and organization of Hive metadata. The benefit of HMMT is that the tool provides an easy way to make sure that the Hive metastore reflects the results of a data migration. HMMT may also be executed against a set of artifacts in anticipation of an upcoming data migration. This section demonstrates the usage and value of HMMT using two examples. One example will cover a table migration related to secure storage transfer, and the other will describe the process to migrate Hive UDF JAR metadata.

Example 1: Enabling secure transfer

Suppose your Hive tables are stored across many different storage accounts, and you have recently enabled secure transfer on a selection of these accounts. Since enabling secure transfer does not automatically update the Hive metastore, the storage URIs must be modified to reflect the change (for example, from WASB to WASBS). With your IP whitelisted and a supported client installed, HMMT can update all matching URIs with a single command, whose arguments are as follows:

- The first four arguments passed to the script correspond to the SQL server, database, and credentials used to access the metastore.

- The next four arguments correspond to the ‘source’ attributes to be searched for. In this case the script will affect WASB accounts Acc1, Acc2 and Acc3. There will be no filtering for the container or subcontainer path. HMMT supports WASB, WASBS, ABFS, ABFSS, and ADL as storage migration options.

- The target flag represents the table in the Hive metastore to be changed. The table SDS stores Hive table locations. Other table options include DBS for Hive databases, FUNC_RU for Hive UDFs, and SKEWED_COL_VALUE_LOC_MAP for the storage locations of skewed values in Hive tables.

- The Query Client flag corresponds to the query command line tool to be used. In this case, the client of choice is Apache Beeline.

- The remaining flags correspond to the ‘destination’ attributes for affected URIs. In this case, all matching URIs specified by the source options will have their account type moved to WASBS. Up to one entry per destination flag is permitted. The values of these flags are merged together to form the post-migration URI pattern.
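Before any migration, it can help to look at which rows a given target and source filter would touch. The Python sketch below builds a read-only SQL query for that purpose. The column names come from the standard Hive metastore schema and are an assumption here, so verify them against your own metastore database. The names `URI_COLUMNS` and `preview_query` are hypothetical and not part of HMMT.

```python
# Assumed mapping from each target table to the column that stores its URI.
URI_COLUMNS = {
    "SDS": "LOCATION",
    "DBS": "DB_LOCATION_URI",
    "FUNC_RU": "RESOURCE_URI",
    "SKEWED_COL_VALUE_LOC_MAP": "LOCATION",
}


def preview_query(target: str, account_type: str, account: str) -> str:
    """Build a SELECT that lists URIs for one account type and account."""
    column = URI_COLUMNS[target]
    # Only plain alphanumeric values are accepted, so nothing needs escaping.
    if not (account_type.isalnum() and account.isalnum()):
        raise ValueError("account type and account must be alphanumeric")
    # '%' is the SQL wildcard: any container, any endpoint suffix and path.
    pattern = f"{account_type}://%@{account}.%"
    return f"SELECT {column} FROM {target} WHERE {column} LIKE '{pattern}';"


print(preview_query("SDS", "wasb", "Acc1"))
```

Because the query only reads, it is safe to run through either supported client when checking what a source filter would select.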

This sample script command will only pick up table URIs corresponding to WASB accounts, where the account name is “Acc1”, “Acc2”, or “Acc3.” The container and path options are left as a wildcard, meaning that every table under any of these three accounts will have its URI adjusted. The adjustment made by the script is to set the storage type to WASBS. No other aspects of the table URIs will be affected.

Example 2: UDF JAR organization

In this example, suppose you have loaded many UDFs into Hive over time. UDFs are implemented in JAR files, which may be stored in various account containers depending on which cluster the JAR was introduced from. As a result, the table FUNC_RU will have many entries across a variety of account containers and paths. If you wanted to clean up the locations of UDF JARs, you could do so with a single HMMT command that targets FUNC_RU.

This command will pick up UDF JAR URIs, which are exclusively found in the table FUNC_RU, in the WASB storage account “Acc1” for any container and subcontainer path. Once the script is complete, the Hive metastore will show that all JARs from that account can be found in the /jarfiles/ directory under the container “jarstoragecontainer.”
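The effect of this migration on individual FUNC_RU entries can be sketched in Python. This models the outcome rather than the tool itself, and it assumes that each JAR keeps its file name while its container and directory change. The function name `relocate_jar` and the sample URIs are hypothetical.

```python
import posixpath
from urllib.parse import urlsplit, urlunsplit


def relocate_jar(
    uri: str,
    account: str = "acc1",
    container: str = "jarstoragecontainer",
    directory: str = "/jarfiles",
) -> str:
    """Point a WASB JAR URI in the given account at the shared JAR directory."""
    parts = urlsplit(uri)
    _, _, host = parts.netloc.partition("@")
    src_account = host.split(".", 1)[0]
    # Only WASB URIs in the chosen account match; others are returned as-is.
    if parts.scheme != "wasb" or src_account.lower() != account:
        return uri
    # Assumption: the file name is kept and only its directory is replaced.
    new_path = posixpath.join(directory, posixpath.basename(parts.path))
    return urlunsplit(parts._replace(netloc=f"{container}@{host}", path=new_path))


# Hypothetical FUNC_RU entries, used only for illustration.
jar_uris = [
    "wasb://cluster1@Acc1.blob.core.windows.net/udfs/team-a/geo.jar",
    "wasb://cluster2@Acc1.blob.core.windows.net/lib/text.jar",
    "wasb://cluster1@Acc2.blob.core.windows.net/udfs/geo.jar",
]
for before in jar_uris:
    print(before, "->", relocate_jar(before))
```

Only the metadata is modeled here. The JAR files themselves must already be, or later be placed, at the new location for Hive to load them.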

Feedback and contributions

We would love to get your feedback. Please reach us with any feature requests, suggestions, and inquiries at askhdinsight@microsoft.com. We also encourage feature asks and source-code contributions to HMMT itself via the HDInsight GitHub repository.

Other resources

- HMMT GitHub repository

- Hive external metastore

- Beeline usage

- Microsoft SqlCmd

- Azure SQL Server Whitelisting

- Azure HDInsight

- HDInsight on GitHub

- MSDN forum

- Stack Overflow

- SQL Database Backup steps

- Guide to HIVE UDFs

Source: Azure Blog Feed