A deep dive into what’s new with Azure Cognitive Services

This blog post was co-authored by Tina Coll, Senior Product Marketing Manager, Azure Cognitive Services.

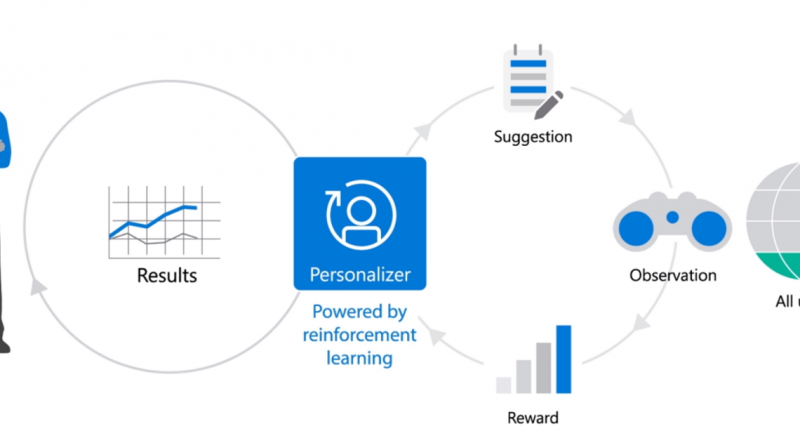

Microsoft Build 2019 marks an important milestone for the evolution of Azure Cognitive Services with the introduction of new services and capabilities for developers. Azure empowers developers to make reinforcement learning real for businesses with the launch of Personalizer. Personalizer, along with Anomaly Detector and Content Moderator, is part of the new Decision category of Cognitive Services, which provides recommendations to enable informed and efficient decision-making for users.

Available now in preview and general availability (GA):

Preview

Cognitive service APIs:

- Personalizer – creates personalized user experiences

- Conversation transcription – transcribes in-person meetings in real time

- Form Recognizer – automates data entry

- Ink Recognizer – unlocks the potential of digital inked content

Container support for businesses’ AI models at the edge and closer to the data:

Generally available

Cognitive Services span the categories of Vision, Speech, Language, Search, and Decision, offering the most comprehensive portfolio in the market for developers who want to embed the ability to see, hear, translate, decide and more into their apps. With so much in store, let’s get to it.

Decision: Introducing Personalizer, reinforcement learning for the enterprise

Retail, media, e-commerce, and many other industries have long pursued the holy grail of personalizing the experience. Unfortunately, giving customers more of what they want often requires stringing together various CRM, DMP, and name-your-acronym platforms and running A/B tests day and night. Reinforcement learning is a set of techniques that allow AI to achieve a goal by learning from what’s happening in the world in real time. Only Azure delivers this powerful reinforcement learning-based capability through a simple-to-use API with Personalizer.
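To make the idea of learning from real-time feedback concrete, here is a minimal sketch of an epsilon-greedy contextual bandit, one of the simplest reinforcement learning techniques. It is not Personalizer's API or its algorithm: the class `EpsilonGreedyRanker`, its `rank` and `reward` methods, and the context and action names are all hypothetical and chosen only for illustration.

```python
import random
from collections import defaultdict


class EpsilonGreedyRanker:
    """Toy contextual bandit (hypothetical, not the Personalizer API).

    Tracks the average reward observed for each (context, action) pair.
    """

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon            # probability of exploring
        self.rng = random.Random(seed)    # seeded for repeatable runs
        self.counts = defaultdict(int)    # observations per (context, action)
        self.values = defaultdict(float)  # running mean reward per pair

    def rank(self, context):
        """Pick an action: explore at random sometimes, else exploit."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.values[(context, a)])

    def reward(self, context, action, score):
        """Fold one observed reward into the running mean for the pair."""
        key = (context, action)
        self.counts[key] += 1
        self.values[key] += (score - self.values[key]) / self.counts[key]


# Usage: choose content for a context, then report how the user responded.
ranker = EpsilonGreedyRanker(["news", "sports", "games"], epsilon=0.1)
choice = ranker.rank("evening")
ranker.reward("evening", choice, 1.0)  # 1.0 = the user engaged
```

The small exploration rate is the design choice that matters here: without occasionally trying a non-favorite action, the ranker could never discover that user preferences have changed.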

Within Microsoft, teams are using Personalizer to enhance the user experience. Xbox saw a 40 percent lift in engagement by using Personalizer to show users the content most likely to interest them.

Speech: In-person meetings just got better with conversation transcription

Conversation transcription, an advanced speech-to-text feature, improves meeting efficiency by transcribing conversations in real time. All participants can engage fully, and the transcript captures who said what and when, so you can quickly follow up on next steps. Pair conversation transcription with a device integrating the Speech Service Device SDK, now generally available, for higher-quality transcriptions. Conversation transcription also integrates with a variety of meeting solutions, including Microsoft Teams and other third-party meeting software. Visit the Speech page to see more details.

Vision: Unlocking the value of your content – from forms to digital inked notes

Form Recognizer uses advanced machine learning technology to extract text and data from business forms and documents more quickly and accurately. With container support, this service can run on-premises and in the cloud. Automate information extraction quickly and tailor it to specific content, with only five samples and no manual labeling.

Ink Recognizer provides applications with the ability to recognize digital handwriting, common shapes, and the layout of inked documents. Through an API call, you can leverage Ink Recognizer to create experiences that combine the benefits of physical pen and paper with the best of the digital.

Integrated in Microsoft Office 365 and Windows, Ink Recognizer gives users freedom to create content in a natural way. In PowerPoint, Ink Recognizer converts ideas to professional-looking slides in a matter of moments.

Bringing AI to the edge

In November 2018, we announced the Preview of Cognitive Services in containers that run on-premises, in the cloud or at the edge, an industry first.

Container support is now available in preview for:

With Cognitive Services in containers, ISVs and enterprises can transform their businesses with edge computing scenarios. Axon, a global leader in connected public safety technologies partnering with more than 17,000 law enforcement agencies in 100+ countries around the world, relies on Cognitive Services in containers for public safety scenarios where the difference of a second in response time matters:

“Microsoft's containers for Cognitive Services allow us to ensure the highest levels of data integrity and compliance for our law enforcement customers while enabling our AI products to perform in situations where network connectivity is limited.”

– Moji Solgi, VP of AI and Machine Learning, Axon

Fortifying the existing Cognitive Services portfolio

In addition to the new Cognitive Services, the following capabilities are generally available:

Neural Text-to-Speech now supports 5 voices and is available in 9 regions to provide customers greater language coverage and support. By changing the styles using Speech Synthesis Markup Language or the voice tuning portal, you can easily refine the voice to express different emotions or speak with different tones for various scenarios. Visit the Text-to-Speech page to “hear” more on the new voices available.
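As a rough sketch of what changing the speaking style through Speech Synthesis Markup Language can look like, the helper below builds an SSML document as a string. The function name `build_ssml`, the voice name, and the style value are placeholders, and the `mstts:express-as` element with a `style` attribute is an assumption about the markup the service accepts; check the Text-to-Speech documentation for the voices, styles, and attribute names actually supported.

```python
from xml.sax.saxutils import escape, quoteattr


def build_ssml(text, voice, style, lang="en-US"):
    """Return an SSML string that asks for `text` in a given voice and style.

    Hypothetical helper: the voice and style values are passed through
    unchanged and are not validated against any service.
    """
    return (
        '<speak version="1.0" '
        'xmlns="http://www.w3.org/2001/10/synthesis" '
        'xmlns:mstts="https://www.w3.org/2001/mstts" '
        f'xml:lang={quoteattr(lang)}>'
        f'<voice name={quoteattr(voice)}>'
        # Assumed element and attribute for selecting a speaking style.
        f'<mstts:express-as style={quoteattr(style)}>'
        f'{escape(text)}'  # escape &, <, > so the document stays well-formed
        '</mstts:express-as></voice></speak>'
    )


# Usage: "placeholder-neural-voice" and "cheerful" are illustrative values.
ssml = build_ssml("Thanks for calling!", "placeholder-neural-voice", "cheerful")
```

Building the markup through a helper that escapes the text keeps user-supplied sentences from breaking the XML, which matters once the spoken text comes from application data.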

Computer Vision Read operation reads multi-page documents and contains improved capabilities for extracting text from the most common file types including PDF and TIFF.

In addition, Computer Vision has an improved image tagging model that now understands 10K+ concepts, scenes, and objects, and has expanded the set of recognized celebrities from 200K to 1M. Video Indexer has several enhancements, including a new AI Editor, and won a NAB Show Product of the Year Award in the AI/ML category at this year’s event.

Named entity recognition, a capability of Text Analytics, takes free-form text and identifies the occurrences of entities such as people, locations, organizations, and more. Through an API call, named entity recognition uses robust machine learning models to find and categorize more than twenty types of named entities in any text document. Named entity recognition supports 19 language models available in preview, with English and Spanish now generally available.

Language Understanding (LUIS) now supports multiple intents to help users better comprehend complex and compound sentences.

QnA Maker supports multi-turn dialogs, enhancing its core capability of extracting dialog from PDFs or websites.

Get started today

Today’s milestones illustrate our commitment to bringing the latest innovations in AI to the intelligent cloud and intelligent edge.

To get started building intelligent vision and search apps, visit the Azure Cognitive Services page.

Source: Azure Blog Feed