Bing Launches More Intelligent Search Features

In December, we announced new intelligent search features which tap into advances in AI to provide people with more comprehensive answers, faster.

Today, we’re excited to announce improvements to our existing features and new scenarios that get you to your answer even faster.

Intelligent Answers Updates

Since December, we’ve received a lot of great feedback on these experiences. Based on that feedback, we’ve expanded many of our answers to the UK, improved the quality and coverage of existing answers, and added new scenarios.


More answers that include relevant information across multiple sources

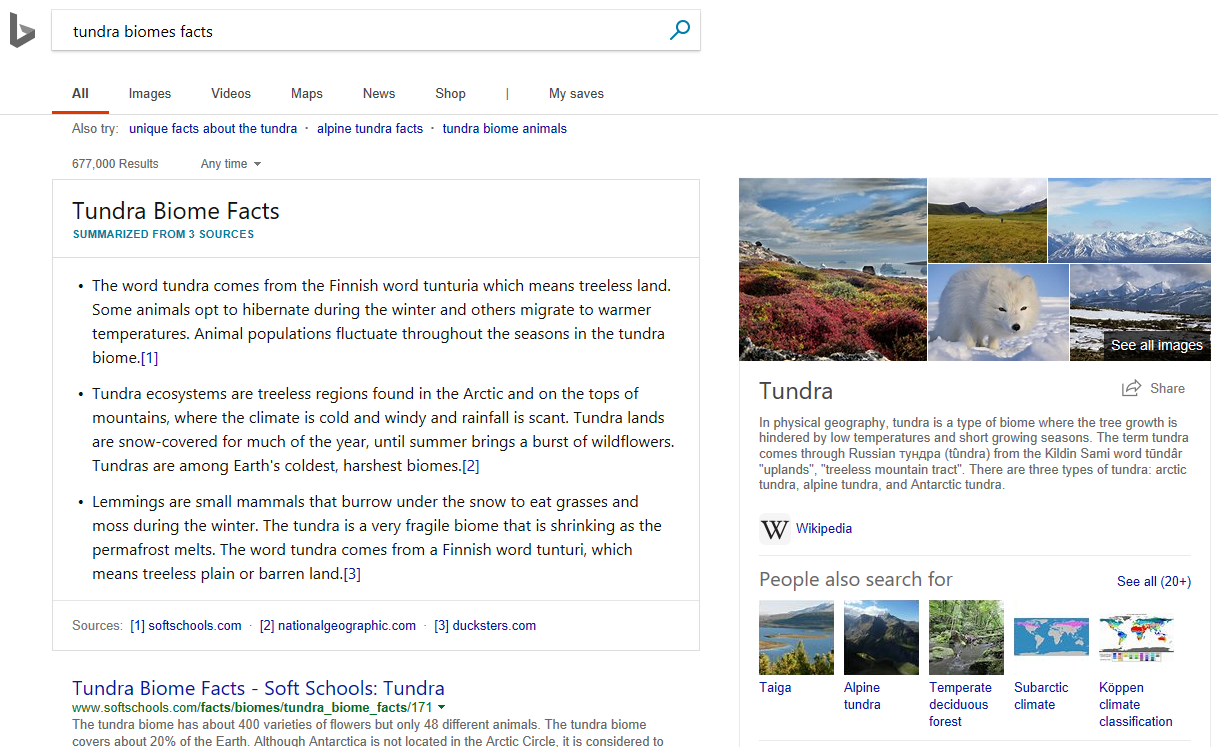

Bing now aggregates facts about a topic from several sites, so you can save time by learning about it without checking each source yourself. For example, if you want to learn more about tundras, simply search for “tundra biome facts” and Bing will show facts compiled from three different sources at the top of the results page.


Hover-over definitions for uncommon words

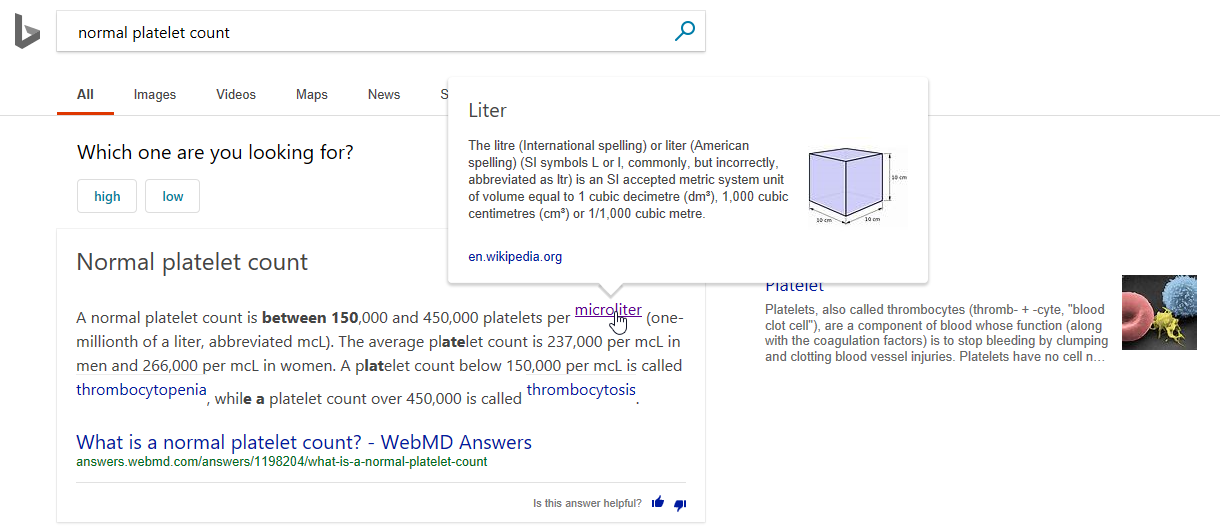

An enhancement to our intelligent answers that we’re rolling out this week gives you insight into unfamiliar topics at a glance. When Bing recognizes a word that isn’t common knowledge, it will now show you the word’s definition when you hover over it with your cursor.

For example, imagine you are searching for the answer to a medical question. We’ve given you an answer, but it contains some terms you aren’t familiar with. Just hover over a term to see its definition without leaving the page.


Multiple answers for how-to questions

We received positive feedback from users, including Bing Insiders, who said they liked being able to view a variety of answer options in one place so they could easily decide which was best for them. We found that multiple answers are especially helpful when users struggle to write a specific enough query, such as when they have a DIY question but may not know the right words to ask. In the next few weeks, we’ll be shipping multiple answers for how-to questions, so people can go one level deeper in their search and find the right information quickly.

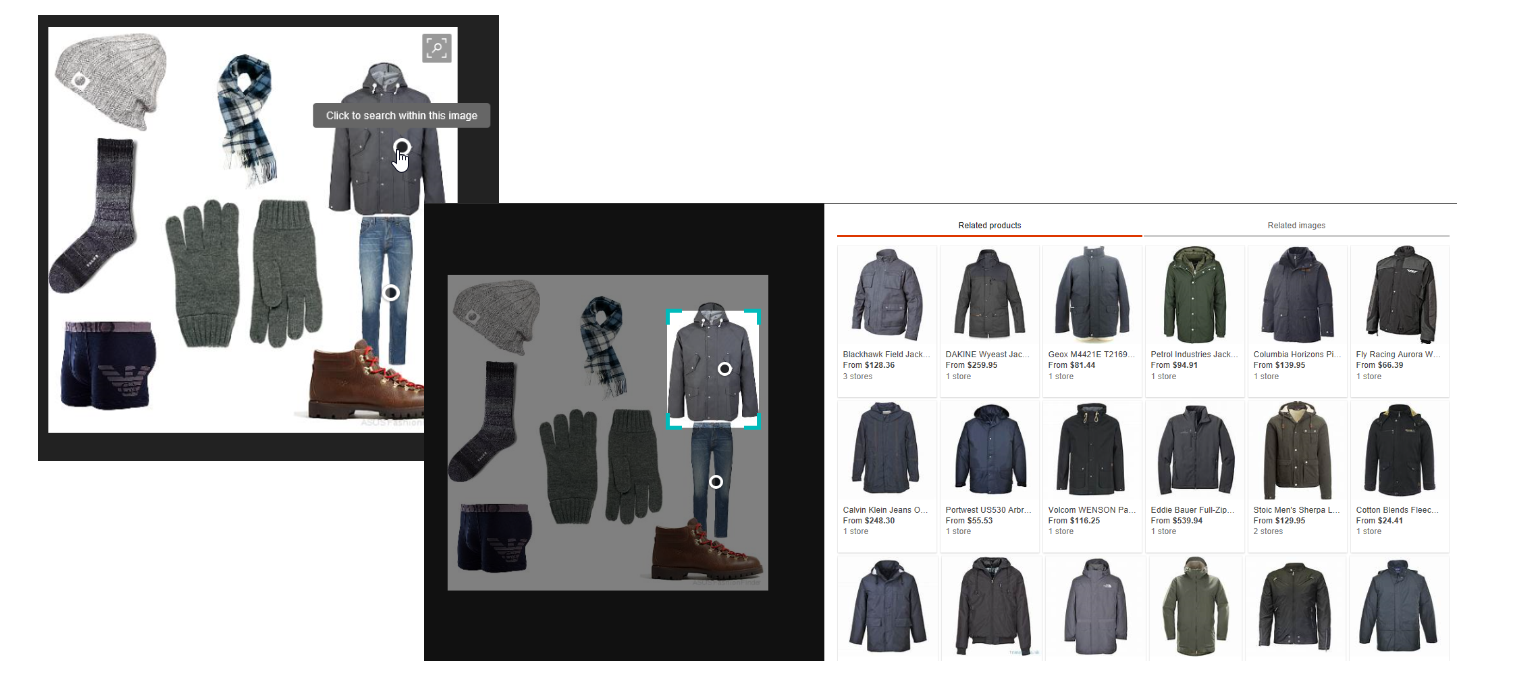

More opportunities to search within an image

Another new feature we announced in December is intelligent image search, which allows you to search within an image to find similar images and products. With intelligent image search, you can select an object by cropping around it manually if you’d like, but our built-in object detection makes this easier by identifying objects and highlighting them with clickable hotspots, so all you have to do is click to get matching results.

When we first launched intelligent image search, object detection focused mostly on a few fashion items, like shirts and handbags. Since then, we’ve expanded object detection to cover all the top fashion categories, so you can find and shop what you see in more places than ever before.

Advancing our intelligent capabilities with Intel FPGA

Delivering intelligent search requires tasks like machine reading comprehension at scale, which demand immense computational power. So we built it on a deep learning acceleration platform called Project Brainwave, which runs deep neural networks on Intel® Arria® and Stratix® Field Programmable Gate Arrays (FPGAs) with latencies on the order of milliseconds.

Intel’s FPGA chips allow Bing to quickly read and analyze billions of documents across the web and provide the best answer to your question in a fraction of a second. Intel’s FPGA devices give Bing not only the real-time performance needed to keep search fast for our users, but also the agility to innovate continuously, using increasingly advanced models to bring you additional intelligent answers and better search results. In fact, Intel’s FPGAs have enabled us to decrease the latency of our models by more than 10x while also increasing our model size by 10x.

We’re excited to disclose the performance details of two of our deep neural networks running in production today. You can read more about the advances we’ve made in the white paper, "Serving DNNs in Real Time at Datacenter Scale with Project Brainwave", and hear from the people working behind the scenes on the project.

We hope you’re as excited about these features as we are, and would love to hear your feedback!

– The Bing Team

Source: Bing Blog Feed