Ingest, prepare, and transform using Azure Databricks and Data Factory

Today’s business managers depend heavily on reliable data integration systems that run complex ETL/ELT (extract-transform-load and extract-load-transform) workflows. These workflows let businesses ingest data in various forms and shapes from different on-premises and cloud data sources, transform and shape that data, and gain actionable insights from it to make important business decisions.

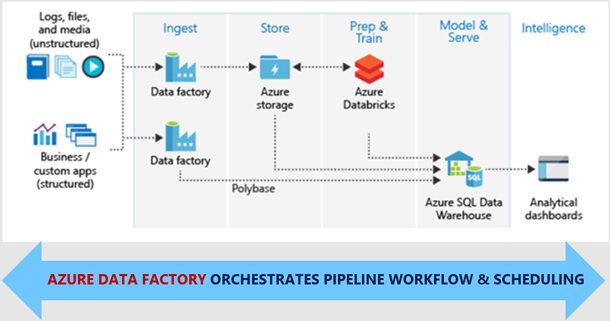

With the general availability of Azure Databricks comes support for doing ETL/ELT with Azure Data Factory. This integration allows you to operationalize ETL/ELT workflows (including analytics workloads in Azure Databricks) using data factory pipelines that do the following:

- Ingest data at scale using 70+ on-prem/cloud data sources

- Prepare and transform (clean, sort, merge, join, etc.) the ingested data in Azure Databricks as a Notebook activity step in data factory pipelines

- Monitor and manage your end-to-end (E2E) workflow

Take a look at a sample data factory pipeline that ingests data from Amazon S3 into Azure Blob storage, processes the ingested data using a notebook running in Azure Databricks, and moves the processed data into Azure SQL Data Warehouse.
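To make the shape of such a pipeline concrete, here is a minimal sketch that builds a three-activity pipeline definition as a Python dictionary and prints it as JSON. The pipeline, activity, dataset, and linked service names, the notebook path, and the copy source and sink type names are all assumptions chosen for illustration; the right values depend on the datasets and linked services defined in your own data factory.

```python
import json


def depends_on(activity_name):
    """Dependency clause: run only after `activity_name` has succeeded."""
    return [{"activity": activity_name, "dependencyConditions": ["Succeeded"]}]


def dataset_ref(name):
    """Reference to a dataset assumed to be defined elsewhere in the factory."""
    return {"referenceName": name, "type": "DatasetReference"}


# All names below are hypothetical placeholders, not values from the post.
sample_pipeline = {
    "name": "S3ToBlobToDatabricksToSqlDw",
    "properties": {
        "activities": [
            {
                # Step 1: ingest from Amazon S3 into Azure Blob storage.
                "name": "IngestFromS3",
                "type": "Copy",
                "inputs": [dataset_ref("AmazonS3Input")],
                "outputs": [dataset_ref("BlobStaging")],
                # Source/sink types are assumptions; they vary by dataset type.
                "typeProperties": {
                    "source": {"type": "FileSystemSource"},
                    "sink": {"type": "BlobSink"},
                },
            },
            {
                # Step 2: prepare and transform in an Azure Databricks notebook.
                "name": "TransformInDatabricks",
                "type": "DatabricksNotebook",
                "dependsOn": depends_on("IngestFromS3"),
                "linkedServiceName": {
                    "referenceName": "AzureDatabricksLinkedService",
                    "type": "LinkedServiceReference",
                },
                "typeProperties": {"notebookPath": "/Shared/prepare-and-transform"},
            },
            {
                # Step 3: move the processed data into Azure SQL Data Warehouse.
                "name": "LoadToSqlDw",
                "type": "Copy",
                "dependsOn": depends_on("TransformInDatabricks"),
                "inputs": [dataset_ref("BlobProcessed")],
                "outputs": [dataset_ref("SqlDwTable")],
                "typeProperties": {
                    "source": {"type": "BlobSource"},
                    "sink": {"type": "SqlDWSink"},
                },
            },
        ]
    },
}

print(json.dumps(sample_pipeline, indent=2))
```

The `dependsOn` clauses are what chain the steps: each activity starts only after the previous one reports success, so a failed ingest never triggers the notebook run.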

You can parameterize the entire workflow (folder name, file name, etc.) using rich expression support and operationalize by defining a trigger in data factory.
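As a rough illustration of parameterization, the sketch below declares two pipeline parameters, passes them to a Databricks Notebook activity through expressions, and defines a daily schedule trigger that supplies their values. The parameter names (`folderName`, `fileName`), the notebook parameter names, the pipeline and trigger names, the notebook path, the start time, and the sample values are all hypothetical.

```python
import json

# Hypothetical pipeline with two parameters consumed by a notebook activity.
parameterized_pipeline = {
    "name": "TransformWithParameters",
    "properties": {
        "parameters": {
            "folderName": {"type": "String"},
            "fileName": {"type": "String"},
        },
        "activities": [
            {
                "name": "TransformInDatabricks",
                "type": "DatabricksNotebook",
                "linkedServiceName": {
                    "referenceName": "AzureDatabricksLinkedService",
                    "type": "LinkedServiceReference",
                },
                "typeProperties": {
                    "notebookPath": "/Shared/prepare-and-transform",
                    # Expressions are evaluated by Data Factory at run time.
                    "baseParameters": {
                        "input_folder": "@pipeline().parameters.folderName",
                        "input_path": (
                            "@concat(pipeline().parameters.folderName, '/', "
                            "pipeline().parameters.fileName)"
                        ),
                    },
                },
            }
        ],
    },
}

# Hypothetical trigger that runs the pipeline once a day with fixed values.
daily_trigger = {
    "name": "DailyTransformTrigger",
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Day",
                "interval": 1,
                "startTime": "2018-04-01T00:00:00Z",
                "timeZone": "UTC",
            }
        },
        "pipelines": [
            {
                "pipelineReference": {
                    "referenceName": parameterized_pipeline["name"],
                    "type": "PipelineReference",
                },
                "parameters": {"folderName": "incoming", "fileName": "sales.csv"},
            }
        ],
    },
}

print(json.dumps(parameterized_pipeline, indent=2))
print(json.dumps(daily_trigger, indent=2))
```

Inside the notebook, values passed through `baseParameters` are typically read as widgets, for example with `dbutils.widgets.get("input_path")`.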

Get started today!

We are excited for you to try the Azure Databricks and Azure Data Factory integration, and we would love to hear your feedback.

Get started by clicking the Author & Monitor tile in your provisioned v2 data factory blade.

Click on the "Transform data with Azure Databricks" tutorial to learn, step by step, how to operationalize your ETL/ELT workloads, including analytics workloads in Azure Databricks, using Azure Data Factory.

We are continuously working to add new features based on customer feedback. Get more information and detailed steps for using the Azure Databricks and Data Factory integration.

Get started building pipelines easily and quickly using Azure Data Factory. If you have any feature requests or want to provide feedback, please visit the Azure Data Factory forum.

Source: Azure Blog Feed