Connected arms: A closer look at the winning idea from Imagine Cup 2018

This blog post was co-authored by Nile Wilson, Software Engineer Intern, Microsoft.

In an earlier post, we explored how several of the top teams at this year’s Imagine Cup had Artificial Intelligence (AI) at the core of their winning solutions. From helping farmers identify and manage diseased plants to helping the hearing-impaired, this year’s finalists tackled difficult problems that affect people from all walks of life.

In this post, we take a closer look at the champion project of Imagine Cup 2018, smartARM.

smartARM

Samin Khan and Hamayal Choudhry are the two-member team behind smartARM. Their story begins with a chance reunion of two former middle school classmates. Samin, studying machine learning and computer vision at the University of Toronto, decided to register for the January 2018 UofTHacks hackathon and coincidentally ran into Hamayal, who was studying mechatronics engineering at the University of Ontario Institute of Technology. Despite his background, Hamayal was more interested in spectating than in participating. But while catching up, they realized that by combining their skills in computer vision, machine learning, and mechatronics, they might just be able to create something special. They formed a team at the hackathon and have been working together since. From the initial hackathon to the Imagine Cup, Khan and Choudhry’s idea has evolved from a small project into a product designed to have an impact on the world.

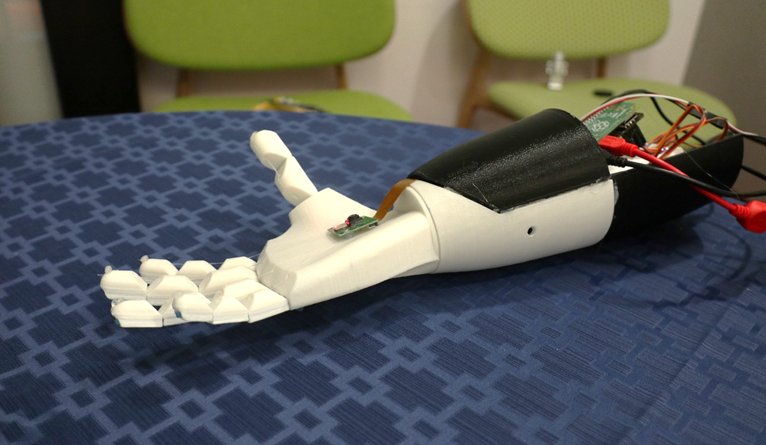

A smartARM prototype resting palm-up on a table.

In the early stages of development, Khan and Choudhry were able to work with Annelisa Bowry, who was excited to provide input for the development of a device that she could use herself as a congenital amputee. Listening to her experiences and receiving direct feedback helped the team tremendously.

While robotic upper-limb prostheses are not new, Khan and Choudhry’s AI approach innovates on the idea of prosthesis control and greatly improves the feasibility of a well-functioning, affordable robotic solution for amputees. Let’s explore how smartARM uses AI to bypass difficult challenges faced by advanced prosthesis developers today, and how this solution can impact the community.

State of the (prosthetic) arm

Before jumping into details, it’s important to understand what kinds of upper-limb prostheses are out there today. Modern prosthetic arms can be broadly sorted into two categories: functional and cosmetic.

Within the functional prosthesis category, there is a wide variety of available functions, from simple hooks to neural interfacing robotic arms. Unfortunately, higher function is often accompanied by higher cost.

When going about our daily activities, we tend to use a wide variety and combination of grasps to interact with a multitude of objects and surfaces. Low-cost and simple solutions, such as hooks, typically only perform one type of grasp. These solutions are body-powered and operated by the user moving a certain part of their body, such as their shoulder. Although these simple solutions are low-cost and can provide the user with a sense of force through cable tension, the prostheses have very limited function. There are also electrically powered alternatives for these simple arms that reduce the strain on the user, as they no longer require body movements to power the arm, but they are typically heavier and a bit costlier without providing much more function.

Neural interfacing research prosthetic arm. Photo courtesy of The Johns Hopkins University Applied Physics Laboratory.

In contrast, neural interfacing upper-limb prostheses provide greater function, including more natural control and multiple grips, but cost much more. These myoelectric prostheses pick up changes in the electrical activity of muscles, measured by electromyography (EMG), and are programmed to interpret specific changes and route them as commands to the robotic hand. This lets the wearer control the arm more naturally. However, myoelectric prostheses are not yet advanced enough to allow for direct, coordinated translation of individual muscle activations to complex hand movements. Some of these myoelectric devices require surgery to move nerve endings from the end of the limb to the chest through an operation called Targeted Muscle Reinnervation (TMR).

Even without surgery, the high cost and limited availability of these myoelectric prostheses limit accessibility. Many are still in the research stage and not yet commercially available.

smartARM aims to fill this gap between low-cost, low-function and high-cost, higher-function below-elbow prostheses through its use of low-cost materials and AI.

Simplicity and innovation

Now that we have a better understanding of what’s available on the market today, we can ask, “What makes smartARM so different?”

Unlike myoelectric prostheses, smartARM does not rely on interpreting neural signals to inform and affect hand shape for grasping. Instead of requiring users to undergo surgery or spend time training muscles or remaining nerve endings to control the prosthesis, smartARM uses AI to think for itself. Determining individual finger movement intentions and complex grasps through EMG is a non-trivial problem. By bypassing the peripheral nervous system for control, smartARM allows for easy control that is not limited by our ability to interpret specific changes in muscle activations.

The camera placed on the wrist of the smartARM allows the prosthetic limb to determine what grasp is appropriate for the object in front of it, without requiring explicit user input or EMG signals. Although the arm “thinks” for itself, it does not act by itself. The user has complete control over when the smartARM activates and changes hand position for grasping.

Although the idea of using a camera may seem simple, it’s not something that has been thoroughly pursued in the world of prosthetic arms. Some out-of-the-box thinking around how the world of prosthetics can blend with the world of computer vision might just help with the development of an affordable multi-grip solution.

Another potential standout feature of a smartARM-like solution is its ability to learn. Because it uses AI to determine individual finger positions to form various grips, the prosthesis can learn to recognize more types of objects as development continues alongside the wearer’s use.

How does it work?

So, how does the smartARM really work?

The camera placed on the wrist of the smartARM continuously captures live video of the view in front of the palm of the hand. This video feed is processed frame-by-frame by an onboard Raspberry Pi Zero W single-board computer. Each frame is run through a locally deployed multi-class model, developed on Microsoft Azure Machine Learning Studio, to estimate the object’s 3D geometric shape.
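To make the per-frame classification step concrete, here is a minimal sketch of a multi-class decision in Python. It is not the team's model: the shape class names are hypothetical, and the raw scores stand in for whatever the locally deployed model outputs for a frame. The sketch only shows how one score per class can be turned into a single predicted shape with a confidence.

```python
import math
from typing import Iterable, Iterator, List, Sequence, Tuple

# Hypothetical shape classes; the post does not list the real ones.
SHAPE_CLASSES: List[str] = ["cylinder", "sphere", "box", "flat"]


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw per-class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_frame(scores: Sequence[float]) -> Tuple[str, float]:
    """Return the most likely shape and its probability for one frame."""
    if len(scores) != len(SHAPE_CLASSES):
        raise ValueError("expected one score per shape class")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return SHAPE_CLASSES[best], probs[best]


def classify_stream(
    frame_scores: Iterable[Sequence[float]],
) -> Iterator[Tuple[str, float]]:
    """Classify frames one at a time, as a live video feed would deliver them."""
    for scores in frame_scores:
        yield classify_frame(scores)
```

Processing frames lazily, one at a time, mirrors the frame-by-frame description above and keeps memory use small, which matters on a board as constrained as the Raspberry Pi Zero W.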

Various common grips associated with different shapes are stored in memory. Once the shape is determined, smartARM selects the corresponding common grip that would allow the user to properly interact with the object. However, smartARM does not change hand position until the user activates the grip.
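The shape-to-grip lookup described here could be sketched as a small table plus a selector that remembers the chosen grip without moving the hand. Everything named below is an assumption made for illustration: the shape labels, the grip names, and the finger flexion values are not taken from smartARM itself.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Grip:
    """Target flexion per finger: 0.0 is fully open, 1.0 is fully closed."""
    name: str
    thumb: float
    index: float
    middle: float
    ring: float
    pinky: float


# Hypothetical table of pre-calculated grips keyed by estimated shape.
GRIPS_BY_SHAPE: Dict[str, Grip] = {
    "cylinder": Grip("power", 0.8, 0.8, 0.8, 0.8, 0.8),
    "sphere": Grip("spherical", 0.6, 0.6, 0.6, 0.6, 0.6),
    "flat": Grip("pinch", 0.7, 0.7, 0.0, 0.0, 0.0),
}


class GripSelector:
    """Tracks the grip matching the latest shape; it never moves the hand."""

    def __init__(self, table: Dict[str, Grip]) -> None:
        self._table = table
        self.pending: Optional[Grip] = None

    def update(self, shape: str) -> Optional[Grip]:
        """Store the grip for this shape, or None if the shape is unknown."""
        self.pending = self._table.get(shape)
        return self.pending
```

Keeping selection separate from actuation reflects the point made above: the arm decides on a grip continuously, but nothing moves until the user asks for it.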

Prior to activating the grip, the user brings the smartARM close to the object they would like to grasp, positioning the hand such that it will hold the object once the grip is activated.

To activate the grip, the user flexes a muscle they have previously calibrated with the trigger muscle sensor. The flex triggers the grip, which moves the fingers to the proper positions based on the pre-calculated grasp. The user is then able to hold the object for as long as the calibrated muscle is flexed.
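As a rough illustration of the trigger logic, the sketch below holds a grip only while a muscle reading stays at or above a calibrated threshold. The calibration rule (the midpoint between average resting and average flexed readings) and the normalized sensor values are assumptions for this example; the post does not describe how smartARM calibrates the sensor.

```python
from typing import List, Sequence, Tuple

FingerPositions = Tuple[float, float, float, float, float]

# Fully open hand: every finger at 0.0 flexion.
OPEN_HAND: FingerPositions = (0.0, 0.0, 0.0, 0.0, 0.0)


def calibrate_threshold(
    rest_samples: Sequence[float], flex_samples: Sequence[float]
) -> float:
    """Assumed calibration: midpoint between mean rest and mean flex readings."""
    rest = sum(rest_samples) / len(rest_samples)
    flex = sum(flex_samples) / len(flex_samples)
    if flex <= rest:
        raise ValueError("flexed readings must exceed resting readings")
    return (rest + flex) / 2.0


def hand_positions(
    sensor_samples: Sequence[float],
    grip: FingerPositions,
    threshold: float,
) -> List[FingerPositions]:
    """For each reading, hold the grip while flexed and open the hand otherwise."""
    return [
        grip if sample >= threshold else OPEN_HAND
        for sample in sensor_samples
    ]
```

A real controller would likely add debouncing or hysteresis so that a noisy reading near the threshold does not make the hand flutter; that is left out here to keep the hold-while-flexed behavior easy to see.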

AI Oriented Architecture for smartARM.

Because smartARM is cloud compatible, it is not limited to the initially loaded model and pre-calculated grips on-board. Over time, the arm can learn to recognize different types of shapes and perform a wider variety of grasps without having to make changes to the hardware.

In addition, users have the option of training the arm to perform new grasps through sending video samples to the cloud with the corresponding finger positions of the desired grip. This allows for individuals to customize the smartARM to recognize objects specific to their daily lives and actuate grips that they commonly use, but others may not.
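One way to picture such a training submission is as a small record that pairs video samples with the desired finger positions. The field names, the 0.0 to 1.0 flexion scale, and the JSON layout below are hypothetical, and the actual upload to the cloud is deliberately left out because the post does not describe that interface.

```python
import json
from typing import Dict, Sequence

FINGERS = ("thumb", "index", "middle", "ring", "pinky")


def build_training_sample(
    label: str,
    finger_positions: Dict[str, float],
    video_files: Sequence[str],
) -> str:
    """Validate a custom grasp and serialize it as a JSON string.

    The schema is an assumption for illustration, not smartARM's format.
    """
    missing = [f for f in FINGERS if f not in finger_positions]
    if missing:
        raise ValueError(f"missing finger positions: {missing}")
    for finger in FINGERS:
        if not 0.0 <= finger_positions[finger] <= 1.0:
            raise ValueError(f"{finger} flexion must be between 0.0 and 1.0")
    if not video_files:
        raise ValueError("at least one video sample is required")
    record = {
        "label": label,
        "finger_positions": {f: finger_positions[f] for f in FINGERS},
        "video_files": list(video_files),
    }
    return json.dumps(record, sort_keys=True)
```

Validating the record before it leaves the device means a malformed grasp is rejected early rather than after a round trip to the cloud.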

Digitally transforming the prosthetic arm

By combining low-cost materials with the cloud and AI, Khan and Choudhry present smartARM as an affordable yet personalized alternative to state-of-the-art advanced prosthetic arms. Not only does their prosthesis allow for multiple types of grips, but it also allows for the arm to learn more grips over time without any costly hardware modifications. This has the potential to greatly impact the quality of life for users who cannot afford expensive myoelectric prostheses but still wish to use a customizable multi-grip hand.

“Imagine a future where all assistive devices are infused with AI and designed to work with you. That could have tremendous positive impact on the quality of people’s lives, all over the world.”

Joseph Sirosh, Corporate Vice President and CTO of AI, Microsoft

Khan and Choudhry’s clever, yet simple, infusion of AI into the prosthetic arm is a great example of how the cloud and AI can truly empower people.

If you have your own ideas for how AI can be used to solve problems important to you and want to know where to begin, get started at the Microsoft AI School site where you will find free tutorials on how to implement your own AI solutions. We cannot wait to see the cool AI applications you will build.

Joseph & Nile

Source: Azure Blog Feed