Introducing WebQA: A Multi-hop, Multi-modal & Open Domain Reasoning Challenge & Benchmark

Web navigation and exploration is an area of reasoning wherein users consume and assimilate information from multiple sources like text, images, or other modalities. In addition, it is multi-hop and exploratory in nature where users often use multiple sources to aggregate different facets of information. Most question answering (QA) systems treat the web primarily as a text-only landscape and this may limit the scope of information that can be extracted. For example, when searching for a query like ‘what color is the belly of a green tree frog’, surfacing the image of the green tree frog along with content associating the image to the species is much more effective than wading through pages trying to find this information documented explicitly somewhere.

Large amounts of (labeled) training data have been vital to the advancement of approaches in QA systems. Datasets are rapidly emerging to solve reasoning over multi-modal and multi-hop QA pairs as well. But most of these approaches either use pre-defined templates for curation of data or encourage a ‘question-decomposition followed by re-routing to a uni-modal model’ approach. However, if we could disentangle extracting knowledge from understanding it, then there would be no need to differentiate whether the knowledge was learned from books versus images, or whether an abstract piece of knowledge is a composite of multiple scattered fragments versus being carried by a single one.

Genuine long-term progress for such systems requires reasoning over both linguistic and visually grounded notions of meaning, ideally under the same representation framework. This depends on the development of a unified system that treats text snippets, images, and other information modalities alike as knowledge carriers. The newly proposed WebQA benchmark is aimed at advancing this goal. It is a multi-hop, multi-modal, open-domain question-answering benchmark in which questions are knowledge-seeking and resemble real-world use cases over text and images.

Figure 1: Example WebQA dataset pipeline

The question requires finding and reasoning about relevant sources and discarding distractors to produce the correct natural language answer

Task Formulation

Consider a textual question Q = ‘What color is the belly of a green tree frog?’ and a set of n sources consisting of supporting sources [s1, s2, …, sm] and distractor sources [sm+1, sm+2, …, sn], where each source is either a text snippet or an image with an associated caption. Ideally, the task would be addressed in a single stage in which a system jointly processes Q, s1, s2, …, sn to produce an answer A. However, no current modeling approach can consume multimodal contexts large enough to do this. In practice, the task is therefore broken down into two stages. In the first stage, the model identifies the sources from which to derive the answer (i.e., multimodal information extraction). The second stage is question answering: the model takes Q and the selected sources as context C and generates the answer A.

In the example shown (Figure 1), the sources contain several distracting pieces of information. They include the colors of different types of frogs (yellow, brown, blue, etc.), body parts other than the belly (thighs, glands, hand, etc.), and various related images of frogs. This setting mimics the real-world scenario of retrieving and sifting through vast amounts of multi-modal information.

Furthermore, no text snippet contains the answer directly. In this image-based query example, the distractors are mined so that they do not leak the correct answer. It is therefore not straightforward for the model to derive information about the species of the frog: the caption is needed first to identify the right image, and the answer is then generated through image understanding. This two-stage reasoning process is both multi-hop and multi-modal.

In the above example, the source retrieval phase should discard all distractor sources that describe other body parts of a frog (e.g., a green/brown dorsal surface or yellow pigments) or other frog species (e.g., the European tree frog or the Pacific tree frog), and should select the facts that support associating the image with the green tree frog. In the second phase, question answering, the model is expected to reason over the supporting source (image + text caption) and infer with high confidence that the belly of the green tree frog is ‘white’.
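The two-stage formulation can be sketched in a few lines of Python. This is only an illustrative sketch, not the benchmark's reference implementation: the `Source` class, the `retrieve` and `answer` functions, the captions, and the file name are all hypothetical, and the retriever is a toy word-overlap ranker standing in for a real multimodal model.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Source:
    """One candidate source: a text snippet, or an image represented by its caption."""
    source_id: str
    text: str                         # snippet text, or the caption of an image
    image_path: Optional[str] = None  # set only for image sources (hypothetical field)


def tokens(text: str) -> set:
    """Lowercased alphanumeric word set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, sources: List[Source], top_k: int = 2) -> List[Source]:
    """Stage 1 (toy): keep the top_k sources with the most word overlap with Q."""
    q = tokens(question)
    ranked = sorted(sources, key=lambda s: len(q & tokens(s.text)), reverse=True)
    return ranked[:top_k]


def answer(question: str, sources: List[Source],
           reader: Callable[[str, List[Source]], str], top_k: int = 2) -> str:
    """Stage 2: pass Q and the selected context C to a reader that generates A."""
    context = retrieve(question, sources, top_k)
    return reader(question, context)


# Made-up sources for illustration only.
sources = [
    Source("img1", "Green tree frog showing its belly", image_path="frog.jpg"),
    Source("txt1", "European tree frog with a brown dorsal surface"),
    Source("txt2", "Poison dart frogs have blue thighs"),
]
question = "What color is the belly of a green tree frog?"
picked = retrieve(question, sources, top_k=1)

# A stub reader that only reports which sources it was given.
stub_reader = lambda q, ctx: "context: " + ",".join(s.source_id for s in ctx)
result = answer(question, sources, stub_reader, top_k=1)
```

A word-overlap ranker like this one is easily fooled by hard negatives that reuse the question's vocabulary, which is the kind of failure the distractors are meant to expose.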

WebQA Data

The first version of the WebQA data is open-domain, and the task is designed to be as close as possible to real-world scenarios: sources cover open-world topics and are derived from prompts based on real-world queries. It targets queries and facts whose answers can be found via either image search or general web (text) search. To obtain rich multi-hop, multi-source questions, we use crowdsourcing to curate both image-based and text-based examples. For both kinds of examples, the data includes text-based and image-based hard negatives for models to sift through.

Answers from Images

The annotators are presented with a set of six related images and asked to produce three QA pairs, selecting for each pair the one or two images that are necessary to answer the question. At least one of the three pairs must use two distinct images. Additionally, annotators need to avoid questions that: a) are simple facts (e.g., "How many wheels does a car have?"); b) are easily answered by a text-only search; c) are bound to a specific image. Requirement (c) ensures that every question is meaningful without its paired context.

Text distractors are based on extracting relevant passages from publicly available content based on noun chunks in the question, while limiting overlap to avoid false negatives. For image distractors, we use both the metadata and visual content to find similar images. The former relies on the Bing Image Search API while the latter uses the Bing Image Insight function for visual similarity. In total, we collect 25K image-based questions.

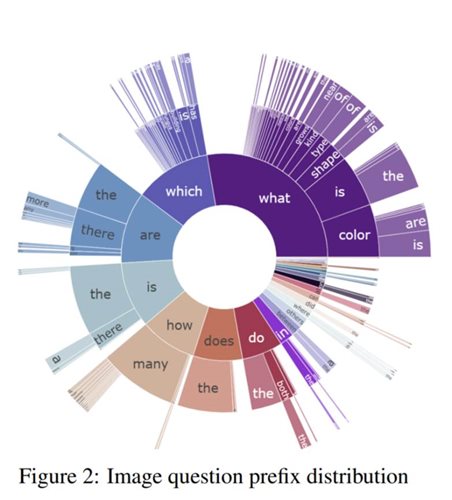

Question prefixes are visualized in Figure 2. Questions are also categorized into open and closed classes. Closed-class questions include color, shape, number (i.e., “how many”), Yes/No, and “choose” (i.e., questions that ask to choose from entities with a particular property). To reward models with better generalization and reasoning, the test set is constructed to be out-of-distribution when possible. For color, shape, and number questions, the answer set is partitioned to ensure that the majority class during training does not carry over to testing. For the "Yes/No" and "choose" classes, multiple models were trained on 10 random train-test splits. Samples that were consistently difficult across splits were considered “hard” and placed in the test set. Finally, open-class “other” questions were randomly split between train and test.
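As a rough illustration of how "consistently difficult" samples might be identified, the sketch below takes per-sample outcomes collected over several random splits and keeps the samples that models rarely answer correctly. The function name `select_hard_samples`, the input layout, and the `max_accuracy` threshold are assumptions made for this example; the actual selection criterion is not specified here.

```python
from typing import Dict, List


def select_hard_samples(results: Dict[str, List[bool]],
                        max_accuracy: float = 0.2) -> List[str]:
    """Return ids of samples answered correctly in at most `max_accuracy`
    of the evaluations they appeared in (hypothetical criterion)."""
    hard = []
    for sample_id, outcomes in results.items():
        if not outcomes:
            continue  # never evaluated in any split, so difficulty is unknown
        accuracy = sum(outcomes) / len(outcomes)
        if accuracy <= max_accuracy:
            hard.append(sample_id)
    return sorted(hard)


# Each list holds one True/False outcome per split in which the sample was tested.
results = {
    "q1": [False, False, False, False, False],
    "q2": [True, False, True, True],
    "q3": [False, False, False, False, True],
    "q4": [],
}
hard_ids = select_hard_samples(results)
```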

Answers from Text

To generate diverse yet consistent topics for mining difficult multi-hop reasoning questions, we construct clusters of similar entities whose text snippets have low overall n-gram overlap or semantic similarity (resulting in 8K clusters). We provide annotators with four snippets to prevent information overload and, by request, allow them to contribute facts they researched to help answer the question. For text distractors, passages that contain noun phrases from the question are mined from publicly available content, and those with the highest lexical overlap that lack any reference to the answer are chosen. For image distractors, the images and captions present on the same publicly available content pages are used, again filtering for those with high lexical overlap. In total, the set contains 25K text-based questions. Since text-based questions lack clear criteria for categorization, we do not construct an adversarial test split but instead sample randomly.
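The text-distractor selection described above can be approximated as follows. This is a simplified sketch under stated assumptions: plain word overlap stands in for noun-phrase matching, and the function `mine_text_distractors`, its parameters, and the sample passages are made up for illustration.

```python
import re
from typing import List


def word_set(text: str) -> set:
    """Lowercased alphanumeric word set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def mine_text_distractors(question: str, answer_keywords: List[str],
                          passages: List[str], k: int = 2) -> List[str]:
    """Pick the k passages with the highest word overlap with the question
    among those that do not mention any answer keyword."""
    q_words = word_set(question)
    answer_words = set()
    for keyword in answer_keywords:
        answer_words |= word_set(keyword)

    candidates = []
    for passage in passages:
        p_words = word_set(passage)
        if p_words & answer_words:
            continue  # mentions the answer, so it could be a false negative
        overlap = len(q_words & p_words)
        if overlap > 0:
            candidates.append((overlap, passage))

    # Highest overlap first; ties keep their original order.
    candidates.sort(key=lambda item: item[0], reverse=True)
    return [passage for _, passage in candidates[:k]]


passages = [
    "The green tree frog has a white belly.",
    "The European tree frog has a green or brown dorsal surface.",
    "Poison dart frogs have blue thighs.",
    "A frog can change color.",
]
distractors = mine_text_distractors(
    "What color is the belly of a green tree frog?", ["white"], passages
)
```

Dropping any passage that mentions the answer is what keeps a distractor from becoming an unlabeled supporting source.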

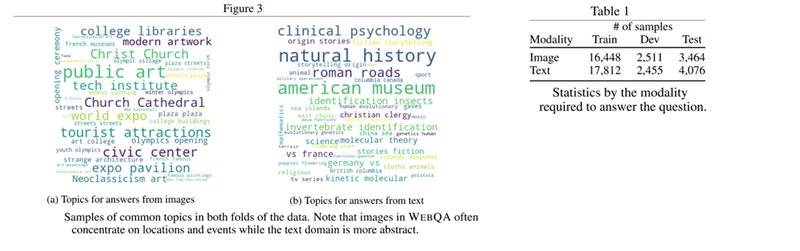

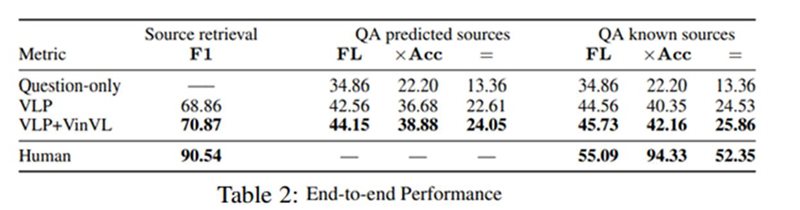

Table 1 shows the distribution of both the Image and Text sets across train, dev, and test samples. Qualitatively, WebQA covers a wide range of topics, as can be observed from Figure 3. Comparing the topic clouds also shows that image-based queries tend to relate to physical entities, while text-based queries are more abstract.

Metrics

The benchmark task expects fluent and complete sentences as answers, which are appropriate for applications such as voice assistants or conversational agents. Quality is therefore measured in terms of both fluency and accuracy. For each testing sample, there are five full-sentence answers provided by humans. In addition, there is one keyword answer, obtained by asking human annotators to rephrase the full-sentence answer into a succinct minimal semantic form. We measure fluency (FL) via an adapted variation of BARTScore, a newly proposed NLG evaluation metric based on accurate measurement of paraphrase similarity. This is combined with accuracy (Acc), measured with answer keywords. Answer keywords a) detect the presence of key entities, b) penalize the use of any incorrect entities, and c) avoid penalizing semantically relevant but superfluous words. Systems are given a combined score FL*Acc across all test samples.
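To make the combined score concrete, here is a minimal sketch. It assumes that the product FL*Acc is taken per sample and then averaged, and it uses simple keyword recall as a stand-in for the accuracy measure; recall covers properties (a) and (c) above but does not penalize incorrect entities as in (b). Fluency values are treated as precomputed inputs, since BARTScore itself is not reproduced here. The function names are hypothetical.

```python
import re
from typing import List, Tuple


def keyword_accuracy(prediction: str, keywords: List[str]) -> float:
    """Fraction of reference keyword words that appear in the prediction.
    Extra words in the prediction are not penalized."""
    predicted = set(re.findall(r"[a-z0-9]+", prediction.lower()))
    reference = set()
    for keyword in keywords:
        reference |= set(re.findall(r"[a-z0-9]+", keyword.lower()))
    if not reference:
        return 0.0
    return len(predicted & reference) / len(reference)


def combined_score(samples: List[Tuple[float, float]]) -> float:
    """Mean of FL * Acc over (fluency, accuracy) pairs, one pair per test sample."""
    if not samples:
        return 0.0
    return sum(fl * acc for fl, acc in samples) / len(samples)


acc_good = keyword_accuracy("The belly of a green tree frog is white.", ["white"])
acc_bad = keyword_accuracy("It is yellow.", ["white"])
# The fluency values 0.5 and 1.0 are placeholders, not real BARTScore outputs.
score = combined_score([(0.5, acc_good), (1.0, acc_bad)])
```

Because the two terms are multiplied, a fluent but wrong answer contributes nothing to the total, which matches the intent of scoring fluency and accuracy jointly.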

New WebQA Challenge for AI Models

WebQA is a new multi-hop, multi-modal question answering challenge that is open-domain and close to real-world application settings. It is aimed at propelling the development of more holistic AI models. Designed to simulate the heterogeneous information landscape one might expect when performing web search, WebQA in its first version contains 46K knowledge-seeking queries whose answers are to be found in either images or snippets. Hard negatives add complexity to the task by requiring a system to determine the relevant sources before reasoning. Table 2 below shows that even state-of-the-art large-scale multimodal models that perform at human levels on other visual and text QA tasks fail to perform well on WebQA, scoring significantly worse than humans. In fact, humans can perform this task with ease (i.e., achieving >94 Acc and >55 FL), as computed via cross-evaluation on six references provided by different annotators, which demonstrates robustness and consensus. While advanced models like VLP and VLP combined with VinVL (a state-of-the-art visual representation) achieve high fluency scores, human-level accuracy is not yet within sight.

These results reveal room for model improvements that will benefit more holistic real-world applications, which are increasingly multi-hop (across sources) and multi-modal (across modality options). Qualitative analysis of the failure cases of these advanced models, for both image-based and text-based questions, reveals some interesting insights.

Both examples for image-based QA show that the model can produce logically consistent and fluent sentences, but that its predictions are incorrect. In the first, the model matches the negation, but the answer should have been yes. In the second, the model runs away with a very logical hallucination (heads wear helmets), which results from not understanding the image.

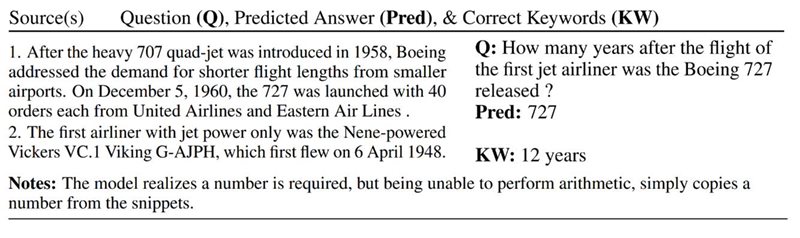

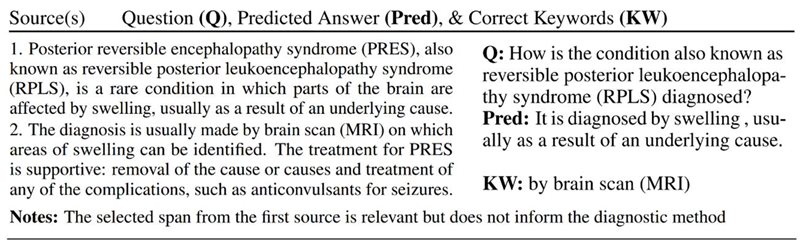

In the text examples, we see a different pattern. Here the model is more easily able to copy facts from the source texts, but it still demonstrates a lack of understanding or reasoning. In the first example, the model appears to know it is looking for a number but chooses one via direct copying rather than performing the arithmetic necessary to combine both facts. In the second case, the model finds a relevant span (which is commonly the only thing necessary for text QA tasks) but does not understand that the question is asking about a method of diagnosis rather than a symptom.

While it is well known that current models are often weak at reasoning or arithmetic, none of the questions presented here require complex information aggregation or analysis. They, as is common in the dataset, follow rather simple implication, addition, or visual extraction patterns which happen to be out of reach for current models (uni- or multi-modal).

Call to Action

WebQA not only closely mirrors our everyday experience on the web, but also provides a playground for the community to explore important sub-challenges, specifically around reasoning, knowledge aggregation, rich visual understanding, and the use of a single model across modalities. There have been a number of recent advances in document-level understanding and in the processing of large context windows. However, WebQA presents a novel challenge in this direction by pushing for the construction of a single model that can process and integrate information across multiple paragraphs and images despite the substantial context window required. We hope that the work on the WebQA dataset and its upcoming versions will drive progress on benchmarking, modeling, and evaluation in multimodal reasoning as well as in broader AI research.

We welcome researchers to participate and engage with us in tackling this exciting set of problems, and to collaborate on developing more congruent and cogent reasoning models! WebQA will be part of the NeurIPS competition track, and the winners will be announced at the end of the competition.

Please read our paper for more details, reach out to us at webqna.github.io, and follow the leaderboard at https://eval.ai/web/challenges/challenge-page/1255/overview.

– Yingshan Chang, Mridu Narang, Hisami Suzuki, Guihong Cao, Jianfeng Gao, Yonatan Bisk

Acknowledgments

We thank Jordi Ribas, Xiaodong Fan, Levi Melnick, Jared Fernandez, Ruotong Wu, MSR and Bing NLP group and leadership for their support, discussions, and suggestions at various stages in the project.

Source: Bing Blog Feed